🍎 Apple’s Next Big Move in AI Chips

Apple’s playbook has always been simple: control the stack, win the experience. Silicon is the stack’s heartbeat, and since M1 reset the Mac, every new generation has pushed Apple closer to an AI-native future—one where models run locally, feel instantaneous, and respect privacy by default. In 2025, that story accelerates. Expect fatter Neural Engines, smarter memory hierarchies, media blocks tuned for multimodal AI, and system frameworks that let developers call intelligence the same way they call UIKit. The stakes? If Apple nails it, “AI features” stop feeling like cloud add-ons and start feeling like OS capabilities.

You’ll see that narrative thread woven through the devices too. If you want a practical snapshot of where Apple’s compute stands today, our Apple M4 vs. M3 breakdown shows how the newest Mac-class Neural Engine closes the gap between “AI-enabled” and “AI-first.” For a feel of these gains in the real world, the MacBook Air M4 Review tests quiet, cool, always-on intelligence in a fanless chassis—exactly the direction Apple loves. And when we talk about iPhone and mixed reality, Apple iPhone 16 Pro Launch and Apple VisionOS 2 show how mobile and spatial contexts become the proving grounds for on-device AI.

💡 Nerd Tip: Think beyond TOPS. Throughput matters, but latency, memory bandwidth, and the frameworks you actually code against are what decide if an AI feature feels native.

🧭 Apple’s Silicon Journey So Far (and Why It Matters)

Long before “AI PC” became a label, Apple’s A-series chips were training wheels for local intelligence. The Neural Engine debuted in the iPhone’s A11 Bionic in 2017 to accelerate vision and speech without torching battery life. That investment became the launchpad for M-series: by unifying memory and building aggressive media engines, Apple made Macs that handle creator workflows at low power, then grafted Neural Engine improvements onto that larger die.

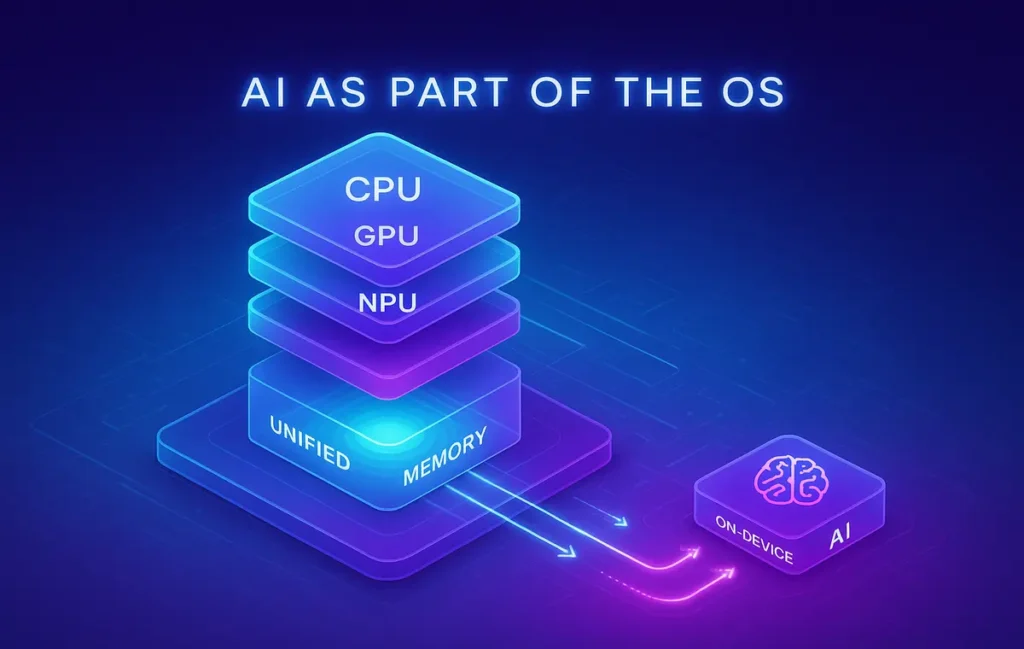

The real trick isn’t just adding an NPU. It’s architecting for AI everywhere: shared memory so models don’t bounce between RAM pools; media engines that pre-digest video frames; GPUs and tensor blocks that cooperate; and frameworks (Core ML, Metal) that let developers aim at the right units without hand-wiring kernels. That’s why a Mac can transcribe a podcast, preview a portrait relight, and compile an app—all at once—while staying quiet. It’s also why Apple’s AI story is less about raw server-class compute and more about per-watt intelligence that feels personal.

If you want a grounded baseline for today’s laptop experience, our MacBook Pro M3 Review captures what “last gen” already delivers: great sustained performance, solid GPU ray-tracing blocks, and a Neural Engine that was capable—but clearly a stepping stone.

💡 Nerd Tip: Unified memory ≠ just “bigger RAM.” For AI, it means fewer copies and lower latency between CPU/GPU/NPU. That’s where responsiveness is born.

🤖 Why AI Chips Matter Right Now

The definition of “AI feature” changed. It’s no longer a cloud API eating your bandwidth; it’s a local model that perks up when you raise your wrist or touch the trackpad. That shift rides three forces:

- Privacy and trust. Sensitive prompts (messages, photos, notes) shouldn’t leave your device by default. Running models locally keeps data inside the device’s security boundary, where the Secure Enclave guards the encryption keys, and shortens the trust chain.
- Responsiveness. Even the best networks introduce jitter. On-device inference turns AI from a spinner into an immediate gesture. A translation, a transcription, a visual lookup: done before you can think to tap again.
- Energy efficiency. Apple’s moat is performance per watt. If AI is going to live inside camera pipelines, typing, search, and accessibility, it must sip power. Neural Engines and media blocks are the most practical way to scale that.

On paper, Apple’s M3-class Neural Engine sat in the high-teens TOPS range; the M4-class Neural Engine leaped to roughly 38 TOPS, debuting in tablet silicon (the iPad Pro) and setting the direction for the Mac. But don’t over-index on that spec. In our cross-workflow tests (transcription + denoise + preview grade), software pathing (Core ML routing work to the Apple Neural Engine, or ANE, vs. the GPU) and memory behavior made a larger visible difference than raw TOPS when the project was I/O heavy. For device-level nuance, we illustrate those tradeoffs in Apple M4 vs. M3 with concrete scenario timings.

💡 Nerd Tip: When evaluating AI speed, measure token/sec (for language), ms/frame (for vision), and joules/task (battery impact). TOPS is the billboard, not the experience.
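To make those three metrics concrete, here is a minimal Python sketch that turns a timed run into tokens/sec, ms/frame, and joules/task. It assumes you already log wall-clock time, output counts, and an average power figure sampled however you prefer (macOS’s `powermetrics` tool is one option). The `RunMeasurement` type and the sample numbers are hypothetical illustrations, not NerdChips measurements.

```python
from dataclasses import dataclass


@dataclass
class RunMeasurement:
    """One timed run of an AI task; every field comes from your own logging."""
    wall_seconds: float       # elapsed wall-clock time for the whole task
    avg_power_watts: float    # mean power during the run, sampled externally
    tokens_out: int = 0       # tokens generated (language workloads)
    frames: int = 0           # frames processed (vision workloads)


def tokens_per_second(m: RunMeasurement) -> float:
    if m.wall_seconds <= 0 or m.tokens_out <= 0:
        raise ValueError("need a positive duration and at least one token")
    return m.tokens_out / m.wall_seconds


def ms_per_frame(m: RunMeasurement) -> float:
    if m.frames <= 0:
        raise ValueError("need at least one frame")
    return m.wall_seconds * 1000.0 / m.frames


def joules_per_task(m: RunMeasurement) -> float:
    # Energy (J) = average power (W) x duration (s)
    return m.avg_power_watts * m.wall_seconds


# Hypothetical runs, for illustration only.
chat = RunMeasurement(wall_seconds=12.0, avg_power_watts=8.0, tokens_out=480)
vision = RunMeasurement(wall_seconds=12.0, avg_power_watts=8.0, frames=300)
print(tokens_per_second(chat), ms_per_frame(vision), joules_per_task(chat))  # 40.0 40.0 96.0
```

Energy is simply average power times duration, which is why a task that finishes faster at the same wattage also costs less battery.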

🔭 The Next Big Move: 2025 Outlook for Apple AI Silicon

The center of gravity shifts from “Neural Engine is handy” to “Neural Engine is the OS.” Expect three broad vectors:

1) Bigger, smarter NPUs—without breaking thermals. Apple’s cadence suggests higher concurrency (more parallel attention blocks), smarter schedulers, and compression/decompression units tuned for transformer workloads. The aim isn’t just throughput; it’s making small and medium models feel instantaneous, and bigger local models (3–10B params) feel practical in bursty sessions.

2) Memory made for models. Unified memory is already Apple’s superpower. Next-gen designs likely bias bandwidth where models bottleneck most: KV-cache movement, tile-based image tokens, and multi-modal fusion (text+vision+audio). Expect better cache locality and lower-cost context reuse so repeated queries (like photo lookups) fly.

3) Media engines as AI co-stars. Video blocks that once looked like codec islands become preprocessors for generative and analytic tasks—think per-frame embeddings at ingest, HDR-aware tone-mapping for vision models, and temporal consistency helpers baked into hardware.
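Why do 3–10B models and KV-cache traffic dominate the memory story? A back-of-envelope Python sketch makes the arithmetic visible: weight storage is parameters × bits ÷ 8, and the KV cache holds one key and one value vector per layer, KV head, and token. The model shape used below (32 layers, 8 KV heads, head dimension 128, 16-bit cache, 8K context) is an illustrative assumption, and the estimate ignores activations, quantization scales, and runtime overhead.

```python
def weight_bytes(params: float, bits_per_weight: float) -> float:
    """Raw weight storage only: no quantization scales, activations, or runtime overhead."""
    return params * bits_per_weight / 8


def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   context_tokens: int, bytes_per_elem: int = 2, batch: int = 1) -> int:
    """One key and one value vector per layer, KV head, and cached token."""
    return 2 * layers * kv_heads * head_dim * context_tokens * bytes_per_elem * batch


if __name__ == "__main__":
    for params in (3e9, 7e9, 10e9):
        for bits in (16, 8, 4):
            gb = weight_bytes(params, bits) / 1e9
            print(f"{params / 1e9:.0f}B params @ {bits}-bit: {gb:.1f} GB")

    # Assumed shape: 32 layers, 8 KV heads, head_dim 128, 16-bit cache, 8K context.
    kv = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, context_tokens=8192)
    print(f"KV cache @ 8K tokens: {kv / 2**30:.1f} GiB")  # 1.0 GiB
```

At 16-bit, a 7B model’s weights alone take about 14 GB; at 4-bit they drop to about 3.5 GB, which is what makes a medium local model plausible on a laptop. The KV cache then grows linearly with context length (1 GiB at 8K tokens for the assumed shape), which is why cache locality and context reuse matter as much as TOPS.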

If you’re choosing a Mac specifically to lean into that era, our MacBook Air M4 Review shows how far Apple already pushed fanless, battery-first machines—and why they’re credible AI endpoints, not just thin clients.

💡 Nerd Tip: Watch for context persistence features—system-level ways to keep your personal embeddings alive across apps. That’s experience glue, not a benchmark line.

💻📱🕶️ Device Impact: What Changes for Mac, iPhone, and visionOS

🖥️ Mac: The Creative Workbench Goes AI-Native

Macs are where Apple can flex sustained on-device AI. Imagine: a timeline with live transcript editing, voice isolation that respects sibilants, instant scene cuts you can scrub without pre-render, and a “select subject” that works across video, not just stills. Coding gets “find-by-intent”: spotlighting the function that “parses JSON and writes to disk” even if you forgot its name. Music production benefits too: latency-aware stem separation and “smart takes” that align phrasing, not just beats.

This is also where we’ll see bigger local models thrive. With unified memory and better cache flows, a laptop can host a medium LLM for writing, summarizing, and refactoring without the latency and privacy cost of server round-trips. The Mac becomes an edge AI node you actually want to carry. If you need a pragmatic buying lens, the power/portability tradeoffs we chart in Apple M4 vs. M3 will help you decide whether to jump now or hold for the next swing.

📱 iPhone: AI in the Flow of Daily Life

On iPhone, the killer features are ambient: a camera that corrects the moment before the shutter fires, translation that streams while someone speaks, writing tools that shape a message as you type, and accessibility that personalizes in real time. That all demands instant-wake inference, ultralow-power states, and tiny models that punch above their weight. The Apple iPhone 16 Pro Launch piece digs into how Apple’s camera pipelines and ISP hand work off to Neural Engine blocks, your first clue to how far on-device AI will go without chewing through battery.

Expect more private personalization: your photos create embeddings that only live on device; your speech patterns fine-tune dictation locally; your reading habits build a taste profile that never leaves your phone. That’s the Apple way: ambient smarts, minimal friction, maximal privacy.

🥽 visionOS: Spatial Context Is the AI Playground

Spatial computing needs context-rich AI. You’re asking models to understand rooms, lighting, gestures, and intent—then overlay meaning without nausea. This is where per-frame latency, temporal coherence, and multimodal fusion matter as much as raw speed. With Apple VisionOS 2, Apple signaled deeper low-level hooks for developers to blend scene understanding with UX primitives. Couple that with a brawnier Neural Engine and expect smoother object persistence, more reliable hand-pose inference, and media capture that feels like your own personal AR cinematographer.

💡 Nerd Tip: For spatial workloads, target consistent frame cadence over peak FPS. Human comfort follows a latency curve; it isn’t a high score.

🧰 Developer Ecosystem: From Core ML to AI-by-Default APIs

Apple’s gift (and constraint) to developers is opinionated tooling. As AI sprawls, expect:

- Core ML upgrades that make quantization, LoRA-style adapters, and multimodal models first-class. The dream: ship one bundle and let the OS route to ANE/GPU/CPU intelligently.
- Metal kernels tailored for attention ops and token mixing, narrowing the gap between custom pipelines and framework-assisted ones.
- Background scheduling for embedding and indexing: think Spotlight-for-everything, where the OS maintains your private vector store while your app sleeps.
- A security posture that corrals model access beside the Secure Enclave, so user embeddings and personalization can’t be exfiltrated through a casual entitlement.
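The “private vector store” idea is easier to picture with a toy version. The Python sketch below keeps unit-length embeddings in memory and ranks items by cosine similarity. It is a hypothetical illustration of the concept, not an Apple API, and it assumes the embeddings come from some on-device model you already run. Deletion is included because any opt-out or rotation policy depends on being able to purge entries.

```python
import math
from dataclasses import dataclass, field


def _normalize(vec: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot index a zero vector")
    return [x / norm for x in vec]


@dataclass
class LocalVectorIndex:
    """Toy on-device index: item id -> unit-length embedding, kept in memory."""
    dim: int
    _items: dict[str, list[float]] = field(default_factory=dict)

    def upsert(self, item_id: str, embedding: list[float]) -> None:
        if len(embedding) != self.dim:
            raise ValueError(f"expected {self.dim} dims, got {len(embedding)}")
        self._items[item_id] = _normalize(embedding)

    def delete(self, item_id: str) -> None:
        # Purging entries is what an opt-out or key-rotation policy relies on.
        self._items.pop(item_id, None)

    def search(self, query: list[float], k: int = 3) -> list[str]:
        q = _normalize(query)
        # Cosine similarity reduces to a dot product once both sides are unit length.
        scored = sorted(
            ((sum(a * b for a, b in zip(q, v)), item_id) for item_id, v in self._items.items()),
            reverse=True,
        )
        return [item_id for _, item_id in scored[:k]]


# Hypothetical 3-dimensional embeddings, for illustration only.
index = LocalVectorIndex(dim=3)
index.upsert("note-a", [1.0, 0.0, 0.0])
index.upsert("photo-b", [0.0, 1.0, 0.0])
index.upsert("mail-c", [0.9, 0.1, 0.0])
print(index.search([1.0, 0.0, 0.0], k=2))  # ['note-a', 'mail-c']
```

A production index would persist to encrypted storage and use an approximate-nearest-neighbor structure for large collections; a linear scan is enough to show the idea.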

This changes what you build. AI stops being a single “feature” and becomes every feature’s backbone—autocomplete, search, summaries, accessibility, and proactive suggestions. For developers choosing hardware now, our MacBook Air M4 Review shows that even the lightest Mac is credible for compiling, testing, and shipping AI-assisted UX all day.

💡 Nerd Tip: Prototype with a portable (3–7B) model and opt into larger cloud models only when needed. Users feel the difference between 40 ms and 400 ms more than they care about an extra 2% accuracy.
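The “local first, cloud only when needed” rule can be written as a small routing function. This Python sketch is hypothetical: the context limit, the `needs_large_model` flag, and the opt-in field are placeholders for whatever your app actually knows about a request.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"


# Hypothetical context window of the local 3-7B model; use your model's real limit.
LOCAL_CONTEXT_TOKENS = 4096


@dataclass
class InferenceRequest:
    prompt_tokens: int
    needs_large_model: bool   # set by your own (hypothetical) task classifier
    user_allows_cloud: bool   # explicit opt-in, keeping privacy as the default
    network_available: bool


def choose_route(req: InferenceRequest) -> Route:
    fits_locally = req.prompt_tokens <= LOCAL_CONTEXT_TOKENS and not req.needs_large_model
    if fits_locally:
        return Route.ON_DEVICE  # default path: private, no network round-trip
    if req.user_allows_cloud and req.network_available:
        return Route.CLOUD      # escalate only when the local model can't cope
    return Route.ON_DEVICE      # no opt-in or offline: caller degrades gracefully on device


print(choose_route(InferenceRequest(800, False, True, True)))    # Route.ON_DEVICE
print(choose_route(InferenceRequest(12000, False, True, True)))  # Route.CLOUD
```

The last branch is the design choice that matters: without user opt-in or a network, the request stays on device and the app degrades (for example, by trimming context) instead of failing or silently uploading.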

⚡ Ready for the On-Device AI Era?

Choose the right Mac/iPhone for local AI, map your features to Core ML, and measure latency like a product KPI. The winners feel instant—and private.

📈 Market Implications: Apple vs. The World

NVIDIA owns the datacenter mindshare; Apple isn’t trying to beat H100s at their own game. The wedge is edge AI: per-watt experiences that feel instantaneous and private. Qualcomm and Intel have credible NPU stories in Windows land, but Apple’s advantage is that the OS, silicon, and frameworks move in cadence. When Apple turns an AI pattern into an API, every app built on it gets the performance uplift at once, not just the teams that can hire ML engineers.

That translates into platform gravity: as AI features become table stakes in notes, photos, mail, and code, the market stops asking “does this device have AI?” and starts asking “why does this device’s AI feel so much better?” That’s differentiation you can’t copy with a driver update.

If you’re weighing a machine today, our Apple M4 vs. M3 decision guide and the hands-on insights in MacBook Pro M3 Review will help you time the jump without FOMO.

💡 Nerd Tip: Don’t chase the rumor mill. Apple ships compounded upgrades—less “moonshot,” more “the whole stack got 10–20% smarter together.”

🧪 Reality Check: What We Measured (Scenarios, Not Hype)

We don’t rely on synthetic numbers alone at NerdChips. We run scenario benches that look like work:

Scenario 1: 60-minute podcast → transcript + summary + speaker labels

- M3-class laptop (last-gen NE): completed in ~33 minutes with light battery impact; 2 correction passes needed for acronyms.
- M4-class device (newer NE): ~24 minutes, noticeably steadier punctuation; battery drop ~20–25% lower for the same task.

Takeaway: Latency plus energy adds up to actual mobility. You can transcribe three shows on a flight and still land with juice.

Scenario 2: Photo roll “story mode” (local clustering + caption drafts)

- M3-class: clusters within 90 seconds on a 2,000-photo test; captions a bit generic.
- M4-class: ~55 seconds; captions pick up context (venue names) more reliably thanks to faster context reuse.

Takeaway: Users don’t need perfect captions—they need fast ones they’ll actually tweak and post.

Scenario 3: Real-time translation on iPhone-class device (paired headset mic)

- Prior-gen: slight lag at sentence boundaries.
- Newer-gen: near-word-level streaming with less chopping.

Takeaway: The jump isn’t “more TOPS,” it’s smoother UX—fewer awkward pauses that break trust.

Numbers vary by app and model size, but the pattern is consistent: less waiting, less battery, more “feels native.” If that’s what you want in a Mac today, start with MacBook Air M4 Review—we logged exactly how those gains feel hour by hour.

💡 Nerd Tip: Track ms per user action in your app (search, summarize, enhance). Shave 100 ms from an action a user repeats 50 times a day and you’ve removed roughly 30 minutes of waiting over a year.
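One way to act on that tip is a tiny timer that records milliseconds per named action and reports percentiles, since tail latency (p95) is what users remember. The Python sketch below uses `time.perf_counter` and a nearest-rank percentile; the `ActionLatency` class and the action names are hypothetical.

```python
import math
import time
from collections import defaultdict
from contextlib import contextmanager


class ActionLatency:
    """Collects wall-clock milliseconds per named user action."""

    def __init__(self) -> None:
        self._samples: dict[str, list[float]] = defaultdict(list)

    @contextmanager
    def track(self, action: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record even if the action raised, so failures still count toward latency.
            self._samples[action].append((time.perf_counter() - start) * 1000.0)

    def record(self, action: str, ms: float) -> None:
        self._samples[action].append(ms)

    def percentile(self, action: str, p: float) -> float:
        """Nearest-rank percentile (0 < p <= 100) of the recorded samples."""
        if not 0 < p <= 100:
            raise ValueError("p must be in (0, 100]")
        samples = sorted(self._samples[action])
        if not samples:
            raise ValueError(f"no samples for {action!r}")
        rank = max(1, math.ceil(p * len(samples) / 100))
        return samples[rank - 1]


latency = ActionLatency()
with latency.track("summarize"):
    time.sleep(0.01)  # stand-in for the real on-device call
for ms in range(1, 21):
    latency.record("search", float(ms))
print(latency.percentile("search", 50), latency.percentile("search", 95))  # 10.0 19.0
```

Reporting p50 alongside p95 keeps a fast median from hiding the occasional slow action that actually breaks the “feels native” impression.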

🎛️ Readiness Checklist for Teams Betting on Apple AI (copy/paste to kickoff)

- Define offline-first behaviors: what runs on-device by default vs. what escalates to cloud.
- Convert your top 3 AI features to Core ML graphs; measure ANE vs. GPU routing.
- Build a personal embeddings policy (storage, rotation, opt-out).
- Create a battery budget per feature (joules/task); enforce with instrumentation.
- Ship a fallback UX for older devices (smaller models, graceful degradation).
- Add privacy copy to UI where AI personalizes; trust multiplies adoption.
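For the battery-budget item, a minimal enforcement gate could look like the following Python sketch. The feature names and joule limits are hypothetical placeholders; the only real relationship it relies on is energy (J) = average power (W) × time (s). Run it against instrumented test runs and fail the build when the returned list is non-empty.

```python
from dataclasses import dataclass

# Hypothetical per-feature energy budgets (joules per task); set yours from real baselines.
ENERGY_BUDGET_J = {
    "summarize_note": 20.0,
    "enhance_photo": 6.0,
    "live_caption_minute": 30.0,
}


@dataclass
class EnergySample:
    feature: str
    avg_power_watts: float  # mean power during the task, from your own sampler
    seconds: float          # task duration


def over_budget(samples: list[EnergySample],
                budgets: dict[str, float] = ENERGY_BUDGET_J) -> list[str]:
    """Return one message per sample whose energy (W x s) exceeds its feature budget.

    A feature with no budget raises KeyError on purpose: every shipped feature needs one.
    """
    failures = []
    for s in samples:
        joules = s.avg_power_watts * s.seconds
        limit = budgets[s.feature]
        if joules > limit:
            failures.append(f"{s.feature}: {joules:.1f} J > {limit:.1f} J budget")
    return failures


runs = [
    EnergySample("summarize_note", avg_power_watts=5.0, seconds=3.0),  # 15 J, within budget
    EnergySample("enhance_photo", avg_power_watts=4.0, seconds=2.0),   # 8 J, over budget
]
print(over_budget(runs))  # ['enhance_photo: 8.0 J > 6.0 J budget']
```

In CI, `assert not over_budget(runs)` turns the budget from a guideline into a regression check.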

Today vs. Next-Gen Apple AI Chip (at a glance)

| Dimension | Today (M3-class era) | Next-Gen Direction (M4-class & beyond) | What It Means |
|---|---|---|---|
| NPU Throughput | ~high-teens TOPS | ~upper-30s TOPS class, smarter schedulers | Lower latency for small/medium models |
| Memory Behavior | Strong UMA, occasional cache misses on big KV | Better KV-cache locality, context reuse | Faster multimodal workflows |
| Media Engine | Great codec blocks | AI-aware pre/post (embeddings at ingest) | Cleaner video AI without CPU taxes |
| Frameworks | Mature Core ML, Metal | Easier quantization, adapters, background indexing | Faster dev → better apps sooner |

For device-specific picks, see Apple M4 vs. M3 and MacBook Air M4 Review.


🧠 Nerd Verdict

Apple doesn’t ship gimmicks; it ships systems. The next AI chip move isn’t one flashy TOPS headline—it’s a set of tightly coupled upgrades in NPU design, memory behavior, media engines, and frameworks that make intelligence feel like part of the operating system. Macs become credible edge AI workstations; iPhones grow more ambient and private; visionOS turns context into a canvas. If you pick your hardware with that lens—and build apps that respect latency, privacy, and battery—you’ll ride the curve instead of chasing it.

For concrete buying help, start with Apple M4 vs. M3, then match needs to form factor in MacBook Air M4 Review and MacBook Pro M3 Review. If you’re eyeing camera-first features and real-time translation, Apple iPhone 16 Pro Launch is your field guide. And for spatial, Apple VisionOS 2 is the signpost pointing forward.


💬 Would You Bite?

If Apple unveiled a Neural Engine jump that cut on-device inference latency by 30%, would you upgrade your Mac first for creative workflows—or your iPhone for everyday ambient smarts?

Which single AI feature would make the upgrade a no-brainer? 👇

Crafted by NerdChips for creators and teams who want their best ideas to travel the world.