🔥 Intro:

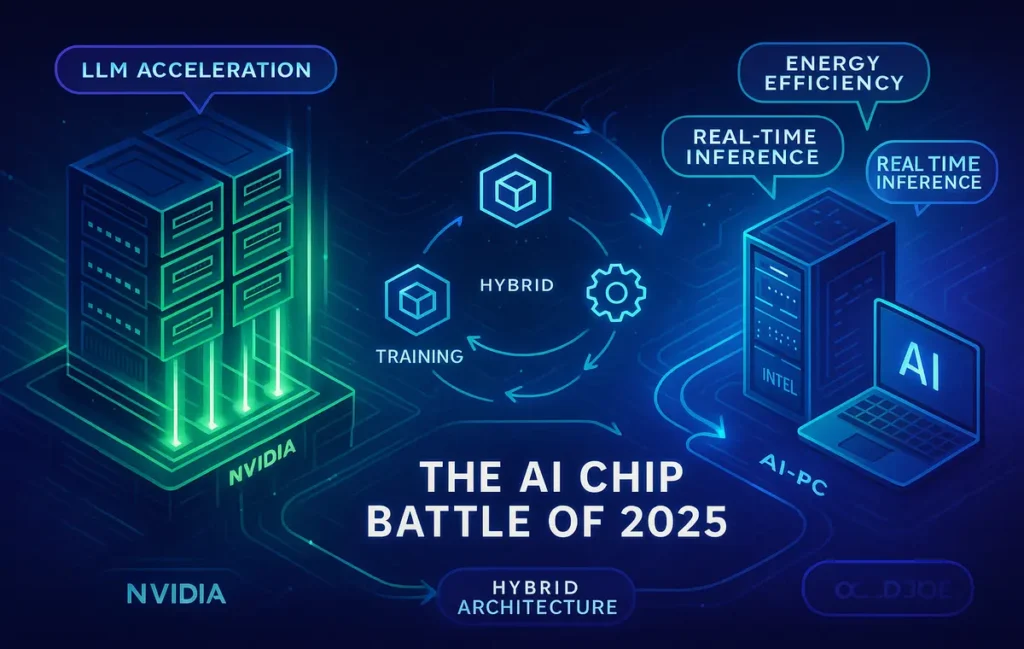

If the AI chip wars are a battlefield, two banners fly highest in 2025: Intel and NVIDIA. One commands AI with an iron grip on GPUs and the CUDA ecosystem. The other is counter-punching with dedicated AI accelerators, NPUs in mainstream laptops, and a revitalized foundry strategy. The question that matters to founders, developers, and ops teams is simple: who shapes the next wave of AI compute—and where should you place your bets?

💡 Nerd Tip: Think in projects, not brands. Your “winner” is the stack that shortens time-to-value for your specific workloads.

🧭 Context & Who It’s For

This is a news-meets-analysis guide created for hardware enthusiasts, CTOs, AI researchers, and investors who need a clear, practical read on the Intel vs NVIDIA duel. It’s not a broad industry roundup (you’ll find that in The AI Chip Wars); it’s a head-to-head on architectures, ecosystems, scaling realities, and how 2025’s decisions ripple across cost, performance, and developer velocity. Expect pragmatic takeaways, not hype. When relevant, we’ll point to complementary deep dives like On-Device AI Race: Apple, Qualcomm, Intel, NVIDIA—Who’s Winning What? and NVIDIA RTX 5090 Review for graphics-centric readers.

🧠 Why the AI Chip Battle Matters

AI is no longer a single workload. It’s training, fine-tuning, inference at scale, and on-device personalization—each with different compute profiles and economics. This makes chip choices strategic:

- In data centers, availability of accelerators dictates how fast you train and how much you pay per token or per batch.
- In consumer devices, NPUs move assistants and productivity features on-device for speed and privacy, lowering cloud costs and latency.
- In enterprises, software ecosystem maturity determines whether projects deploy in weeks or linger in proof-of-concept purgatory.

A recurring pattern in 2025: speed + cost + software lock-in. NVIDIA’s lead in GPUs and CUDA means a massive base of libraries, models, and dev expertise. Intel’s play is to undercut total cost of ownership (TCO) on standardized inference/fine-tune workloads with Gaudi accelerators and to flood the market with AI PCs using NPUs for everyday productivity. Those different bets create real trade-offs: pay for the shortest path to production (NVIDIA) versus optimize cost at scale where workloads are repeatable (Intel).

👑 NVIDIA’s Position: GPU King of AI

The beating heart of NVIDIA’s advantage is CUDA—a software moat built over a decade of developer tooling, libraries (cuDNN, NCCL), and compatibility across frameworks. When a lab posts a breakthrough model recipe, chances are it was trained on NVIDIA GPUs and published with CUDA-friendly instructions. That top-to-bottom developer experience translates into lower integration friction and faster ramp-up.

On the hardware front, H100 has been the workhorse for large-scale training and high-throughput inference. In 2025, the conversation increasingly orbits the Blackwell generation (often discussed as B-series parts) promising big leaps in FLOPS/Watt and memory bandwidth—exact specs vary by SKU, but the thrust is clear: more performance per rack, better efficiency, and tighter coupling with high-speed interconnects. For practitioners, that means denser clusters, better scaling, and fewer architectural compromises in pipeline or tensor parallelism.

NVIDIA’s vertically integrated software, from Triton Inference Server to TensorRT and NeMo, remains a force multiplier. Teams commonly report a shorter path from a fresh setup to a first working model on NVIDIA, and that ops tooling (profilers, debuggers, schedulers) reduces the “mystery time” in performance tuning. Add to that a mature ecosystem of integrators and cloud options, and it’s easy to see why many organizations default to green.

💡 Nerd Tip: If your roadmap involves rapid iteration on cutting-edge architectures (MoE variants, diffusion hybrids, longer-context LLMs), the safest path is still CUDA-land—you’ll find more working examples, more seasoned MLOps talent, and more predictable performance tuning guides.

🥊 Intel’s Counterattack: Gaudi, NPUs & a Foundry Bet

Intel’s strategy is two-pronged:

- Data Center Accelerators (Gaudi family): Intel positions Gaudi accelerators as a cost-efficient alternative for common training and high-volume inference. The pitch: competitive throughput on standardized LLM and vision workloads, lower TCO (especially under long-running contracts), and open software stacks targeting portability. With Gaudi 3 entering the conversation, Intel emphasizes improved BF16/FP8 throughput, higher memory bandwidth, and a tighter compiler/runtime toolchain. The reality in the trenches: for repeatable models (e.g., popular LLaMA variants, Stable Diffusion families, standard encoder-decoder tasks), teams report promising price/performance. For frontier research or bespoke kernels, CUDA still wins on ecosystem maturity.
- AI PCs (Core Ultra / Meteor Lake & beyond): Intel’s NPU push on consumer and enterprise laptops turns AI features (transcription, background removal, on-device summarization) into standard OS capabilities. This is about latency, privacy, and battery life. By moving inference to NPUs, companies can trim cloud spend and deliver always-on AI. For marketers and IT buyers, that translates to tangible ROI across fleets: meeting notes, assistive editing, helpdesk copilots, all local-first.

Layered above both are Intel’s foundry pivot and its partnerships. The more credible its manufacturing roadmaps and packaging (e.g., advanced 3D stacking, chiplets), the easier it becomes to promise availability, price stability, and performance growth. In 2025, that message resonates with enterprises burned by 2023–2024’s capacity shocks.

💡 Nerd Tip: If your workload is standardizable and you’re cost-sensitive at scale, pilot on Gaudi while maintaining CUDA portability in your pipeline definitions. Aim for framework parity and containerized builds from day one.

⚙️ Comparing Architectures & Ecosystems (GPUs vs Accelerators vs NPUs)

At a high level, GPUs shine in generality: massive parallelism, rich kernels, and the benefit of being the default target for most research code. AI accelerators (like Gaudi) trade some of that generality for sustained throughput and IO efficiency on common tensor ops—think of them as production workhorses. NPUs are about ultra-efficient on-device inference for everyday tasks.

Software stacks are the crux:

- NVIDIA CUDA: Premier developer experience, best-in-class tooling, and deep framework integrations.
- Intel oneAPI/OpenVINO: Pushes portability across CPUs, GPUs, NPUs, and accelerators. For inference pipelines that live across edge + cloud, this matters.
- Framework support: PyTorch/TensorFlow are first-class on CUDA; ONNX and OpenVINO bridges help Intel compete on portability.
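ONNX Runtime is one concrete place where this portability shows up: a session takes an ordered list of execution providers and tries them in priority order. The sketch below is illustrative, not an official helper. `pick_providers`, its preference order, and the `model.onnx` path are assumptions; the provider name strings and the `onnxruntime.get_available_providers()` / `InferenceSession(..., providers=...)` calls mentioned in the comments are real ONNX Runtime API.

```python
from typing import Iterable, List, Sequence

# Illustrative preference order (an assumption; tune it per deployment).
# The strings are real ONNX Runtime execution-provider names.
DEFAULT_PREFERENCE = (
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "OpenVINOExecutionProvider",
    "CPUExecutionProvider",
)


def pick_providers(available: Iterable[str],
                   preference: Sequence[str] = DEFAULT_PREFERENCE) -> List[str]:
    """Keep the preferred providers that are installed, in preference order.

    CPUExecutionProvider is always appended last as a universal fallback.
    """
    installed = set(available)
    chosen = [p for p in preference if p in installed]
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    # On a real host: chosen = pick_providers(onnxruntime.get_available_providers()),
    # then onnxruntime.InferenceSession("model.onnx", providers=chosen).
    print(pick_providers(["CPUExecutionProvider", "OpenVINOExecutionProvider"]))
    # ['OpenVINOExecutionProvider', 'CPUExecutionProvider']
```

The same exported model then runs on an NVIDIA box or an Intel CPU/GPU/NPU host by changing only which providers are installed, which is the portability argument in miniature.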

From a team dynamics lens, CUDA’s advantage means less time re-writing kernels and more time experimenting. On the other hand, ops leaders chasing hard savings can justify learning curves if TCO drops 15–30% on fixed recipes. In 2025, we see more hybrid stacks: train on NVIDIA, serve inference on Intel (or mix with CPUs/NPUs at the edge).
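The 15–30% figure is easier to reason about as a payback period: a one-off porting effort is recovered by the monthly savings. A minimal sketch; the monthly spend and porting cost below are hypothetical placeholders, not benchmarks or quotes.

```python
def payback_months(monthly_spend: float, tco_drop: float, porting_cost: float) -> float:
    """Months until a one-off porting cost is recovered by a fractional TCO drop."""
    if not 0 < tco_drop < 1:
        raise ValueError("tco_drop must be a fraction between 0 and 1")
    monthly_savings = monthly_spend * tco_drop
    return porting_cost / monthly_savings


if __name__ == "__main__":
    # Hypothetical inputs: $200k/month inference bill, $300k of porting effort.
    for drop in (0.15, 0.30):
        print(f"{drop:.0%} drop -> {payback_months(200_000, drop, 300_000):.1f} months")
```

With these placeholder numbers the payback is roughly ten months at a 15% drop and five at 30%, which is why the savings case leans on fixed recipes that will keep running long enough to pay back the switch.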

📊 Market Dynamics in 2025

Demand remains white-hot. Model sizes, context windows, and retrieval workloads keep pushing compute up and to the right. NVIDIA enjoys a massive install base and continued cloud priority, which keeps CUDA “default-true.” Intel gains ground by attacking availability and cost, especially with enterprise buyers who value predictability over chasing the last 5% of speed.

From a risk perspective:

- Supply chain is healthier than 2023, but priority access still favors hyperscalers.
- Cost volatility persists in GPU instances; accelerators with long-term contracts appeal to CFOs.
- Ops efficiency (utilization, scheduling, mixed precision) often yields bigger gains than switching silicon; don’t neglect the software layer.

💡 Nerd Tip: Before you switch hardware, audit utilization. Many teams discover that tokenization, data pipelines, or suboptimal sharding are the actual bottlenecks.
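One quick way to run that audit is to time each stage of a step and see what fraction the accelerator actually spends computing. A minimal sketch, assuming you have already collected per-stage wall-clock timings and that stages run back to back rather than overlapped; the stage names and numbers are hypothetical.

```python
from typing import Dict, Tuple


def audit_step(stage_seconds: Dict[str, float],
               compute_stage: str = "compute") -> Tuple[float, str]:
    """Return (accelerator busy fraction, slowest non-compute stage).

    Assumes sequential stages, so step time is the sum of stage times.
    """
    total = sum(stage_seconds.values())
    busy_fraction = stage_seconds[compute_stage] / total
    others = {k: v for k, v in stage_seconds.items() if k != compute_stage}
    bottleneck = max(others, key=others.get)
    return busy_fraction, bottleneck


if __name__ == "__main__":
    # Hypothetical timings for one step, in seconds.
    timings = {"data_load": 0.30, "tokenize": 0.25, "host_to_device": 0.05, "compute": 0.40}
    frac, worst = audit_step(timings)
    print(f"accelerator busy {frac:.0%}; biggest non-compute stage: {worst}")
```

In this made-up profile the accelerator idles for most of the step and data loading is the first fix, a problem no hardware swap would solve.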

🧩 Who’s Winning Where?

Data Centers (training + large-scale inference):

NVIDIA leads. CUDA depth, mature libraries, and cluster-level orchestration make it the default for ambitious model R&D and high-stakes launches. If your team must iterate fast on novel architectures, the “answer density” on Stack Overflow, GitHub, and internal playbooks skews green.

Enterprise Inference at Scale:

Contested. If your workloads are standardized (e.g., a family of LLMs for support/chat, a set of vision models for QA), Intel Gaudi can present a compelling cost/perf case, especially under long-term agreements. Hybrid approaches—train or fine-tune on NVIDIA, deploy high-throughput inference on Intel—are increasingly common.

AI PCs and On-Device:

Intel pushes hard. With NPUs shipping in large volumes on Windows laptops, enterprise AI features become “always available.” This changes UX expectations: transcription starts local-first, camera AI is battery-friendly, and privacy posture improves. For IT, the draw is clear: fewer cloud hits, lower latency.

Consumer Graphics & Prosumer AI:

NVIDIA’s GeForce line (and halo products like RTX 5090 for the ultra-enthusiast) still dominates mindshare. If you care about gaming + occasional AI on the same rig, green is the comfortable pick. See NVIDIA RTX 5090 Review for that angle.

🧪 Side-by-Side Snapshot (2025)

| Dimension | NVIDIA (GPUs + CUDA) | Intel (Gaudi + NPUs) |
|---|---|---|
| Dev Experience | Deepest ecosystem, abundant tutorials, top-tier profilers | Improving toolchain; strong on inference pipelines with OpenVINO/ONNX |
| Training Frontier | Default choice for frontier research; fastest path to SOTA | Viable on standard LLM/CV; less common for bleeding-edge kernels |
| Inference at Scale | Excellent with TensorRT/Triton; higher instance costs in some clouds | Competitive price/perf for standardized workloads; contracts improve TCO |
| Availability | High demand; capacity can be tight | Attractive where supply is reserved via partners; stable pricing appeals |
| On-Device AI | GPU/RT cores matter for creators; limited NPU footprint | Broad NPU deployment on AI PCs; local features change UX & cost |
| Lock-in Risk | High (CUDA moats) | Lower if you target ONNX/OpenVINO/oneAPI portability |
| Best Fit | Rapid iteration, cutting-edge research, mixed media AI | Cost-efficient inference, enterprise fleets, AI PC enablement |

💡 Nerd Tip: For many teams, the “train on NVIDIA, serve on Intel” pattern yields the best of both worlds—velocity up front, economics at scale.

🧮 Architecture & Software: What Builders Actually Touch

Training stacks in 2025 revolve around PyTorch with a growing set of MoE and longer-context optimizations. On NVIDIA, you’ll find recipes that just work: FlashAttention variants, fused ops, community-tested sharding strategies. On Intel Gaudi, performance is strongest where kernels match popular models and compiler paths are mature; results improve release to release, but bespoke kernels may require extra effort.

Inference stacks are converging on Triton/TensorRT (NVIDIA) and OpenVINO/ONNX Runtime (Intel), with server wrappers for autoscaling and A/B testing. Teams chasing a lower cost per 1M tokens for LLM inference increasingly explore INT8/FP8 paths; whichever stack you pick, invest in a calibration pipeline, because it pays off every month.
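At its core, a calibration pipeline picks a scale for each tensor from representative data. A minimal sketch of symmetric per-tensor INT8 calibration using the simple max-absolute-value rule; production toolkits offer more robust strategies (e.g., entropy- or percentile-based calibration) that this sketch does not implement. It is pure Python to stay self-contained, and the activation values are hypothetical.

```python
from typing import List, Sequence


def calibrate_scale(samples: Sequence[Sequence[float]]) -> float:
    """Symmetric INT8 scale from the max absolute value seen across calibration batches."""
    max_abs = max(abs(x) for batch in samples for x in batch)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values: Sequence[float], scale: float) -> List[int]:
    """Round to the nearest INT8 step and clamp to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map INT8 codes back to real values."""
    return [v * scale for v in q]


if __name__ == "__main__":
    # Hypothetical activations from a few calibration batches.
    calib = [[0.1, -0.5, 2.0], [1.2, -2.54, 0.0]]
    scale = calibrate_scale(calib)
    live = [0.75, -1.9, 3.0]  # 3.0 exceeds the calibrated range and will clip
    restored = dequantize(quantize(live, scale), scale)
    errors = [abs(a - b) for a, b in zip(live, restored)]
    print(f"scale={scale:.4f}, max error={max(errors):.3f}")
```

The clipped 3.0 shows why the pipeline needs ongoing care: when calibration data stops covering live traffic, error jumps from about half a quantization step to the full overshoot.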

🚀 What’s New in 2025 (and Why It Matters)

- Longer contexts, cheaper tokens: As inference cost dominates, KV-cache tricks and speculative decoding matter more than raw FLOPs alone.
- Memory is strategy: Whether it’s HBM capacity on GPUs or bandwidth optimizations on accelerators, the biggest wins often come from feeding the beast efficiently.
- Edge + PC renaissance: With NPUs proliferating, product managers design features around local AI first, cloud optional. Expect more “hybrid intelligence” roadmaps.
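To see why memory, not FLOPs, often sets the ceiling for long contexts, it helps to size the KV cache. A back-of-the-envelope sketch using the standard estimate (keys plus values, for every layer and every cached token). The model shape is a hypothetical 70B-class configuration with grouped-query attention, not any vendor's spec, and real servers add overhead for paging and fragmentation.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, batch: int, bytes_per_elem: int = 2) -> int:
    """Approximate KV-cache size: K and V tensors for every layer and cached token."""
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_elem


if __name__ == "__main__":
    # Hypothetical 70B-class shape with grouped-query attention, FP16 cache.
    size = kv_cache_bytes(layers=80, kv_heads=8, head_dim=128, seq_len=32_768, batch=8)
    print(f"{size / 2**30:.0f} GiB")  # 80 GiB, before any model weights
    # An FP8 cache (1 byte per element) halves the footprint.
    print(f"{kv_cache_bytes(80, 8, 128, 32_768, 8, bytes_per_elem=1) / 2**30:.0f} GiB")  # 40 GiB
```

At that size the cache alone can rival a single accelerator's HBM, so cache reuse, lower-precision caches, and memory capacity move cost per token as much as compute does.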

💡 Nerd Tip: Add context window and memory bandwidth as first-class criteria in your evaluator spreadsheet—not just FLOPs.

🧩 Use-Case Matchmaking

- Research labs & model vendors: Stay NVIDIA-first unless cost pressures force a split. Your scientists ship faster with CUDA’s gravity.
- Enterprise platforms serving millions of inferences/day: Pilot Intel Gaudi clusters for cost leverage. If your model family stabilizes, the savings compound.
- IT leaders rolling out AI to knowledge workers: Prioritize Intel NPU-equipped PCs; local AI aids adoption and lowers cloud drift.
- Prosumer creators & gamers dabbling in AI: NVIDIA remains the practical one-box solution.

For a more consumer-graphics-leaning perspective, our NVIDIA RTX 5090 Review breaks down creator/gamer calculus. For the on-device angle across vendors, see On-Device AI Race: Apple, Qualcomm, Intel, NVIDIA—Who’s Winning What?

🧪 Mini Case Study: Swapping to Save

A fast-growing SaaS startup trained its first two LLM variants on an NVIDIA cluster to speed R&D. Once the architecture stabilized, inference costs dwarfed training. They ran a four-week bake-off: ported their serving stack to OpenVINO/ONNX and piloted Intel Gaudi for production inference behind an API gateway. With quantization and batching tuned, they reported notable per-request savings while keeping latency within SLA. Their final setup: NVIDIA for training & fine-tune, Intel for primary inference, with a small NVIDIA slice retained for spiky traffic and experimental models.

💡 Nerd Tip: Bake-offs must be apples to apples—same prompts, same tokenization, same batch windows. Otherwise, the “winner” is just the test.
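One way to enforce that discipline in code is to refuse to compare two runs unless they used the identical prompt set, then judge each backend on tail latency against the SLA and on cost per request. A minimal sketch; the `Run` fields, the prompt fingerprint scheme, the backend labels, and all numbers are hypothetical, and p95 uses the simple nearest-rank method.

```python
import hashlib
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence


def fingerprint(prompts: Sequence[str]) -> str:
    """Hash the exact prompt list so two runs can prove they used the same inputs."""
    h = hashlib.sha256()
    for p in prompts:
        h.update(p.encode("utf-8"))
        h.update(b"\x00")  # separator so ["ab", "c"] differs from ["a", "bc"]
    return h.hexdigest()


def p95(latencies_ms: Sequence[float]) -> float:
    """Nearest-rank 95th percentile."""
    ordered = sorted(latencies_ms)
    if not ordered:
        raise ValueError("no latency samples")
    return ordered[math.ceil(0.95 * len(ordered)) - 1]


@dataclass
class Run:
    backend: str
    prompt_fp: str
    latencies_ms: List[float]
    cost_usd: float  # total spend for the run


def compare(a: Run, b: Run, sla_ms: float) -> Dict[str, dict]:
    """Side-by-side p95, SLA verdict, and cost per request for two runs."""
    if a.prompt_fp != b.prompt_fp:
        raise ValueError("runs used different prompt sets; comparison is invalid")
    out = {}
    for r in (a, b):
        tail = p95(r.latencies_ms)
        out[r.backend] = {
            "p95_ms": tail,
            "meets_sla": tail <= sla_ms,
            "usd_per_request": r.cost_usd / len(r.latencies_ms),
        }
    return out


if __name__ == "__main__":
    fp = fingerprint(["summarize ticket 1", "summarize ticket 2"])
    run_a = Run("backend_a", fp, [120, 130, 180, 210], 0.40)
    run_b = Run("backend_b", fp, [150, 160, 170, 240], 0.28)
    print(compare(run_a, run_b, sla_ms=250))
```

Failing loudly on a fingerprint mismatch is the design choice that matters here: a comparison that silently mixes prompt sets is exactly the case where the “winner” is just the test.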

🛠️ Troubleshooting & Pro Tips

- Developer lock-in anxiety: Design for portability now. Containerize runtimes, standardize on ONNX exports where possible, and document a “B-plan” pipeline.
- GPU bill shock: Before switching hardware, fix utilization: right-size batch windows, tighten KV cache reuse, and benchmark INT8/FP8 paths.
- Compiler confusion on accelerators: Start with reference recipes for common models, then iterate once you’ve matched baseline parity.
- Hybrid deployments are messy: Use a single feature flagging layer and a shared observability stack so you can A/B across different silicon without chaos.
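For the A/B piece of a hybrid deployment, a common pattern is deterministic hash-based bucketing: the same request key always lands on the same backend, so results are reproducible and a user's session doesn't hop between silicon. A minimal sketch; the pool names and the 10% rollout are hypothetical, and in practice your feature-flag service would supply the percentage.

```python
import hashlib


def assign_backend(request_key: str, canary_backend: str, default_backend: str,
                   canary_percent: float) -> str:
    """Deterministically route a stable fraction of keys to the canary backend."""
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # buckets 0..9999 (0.01% steps)
    return canary_backend if bucket < canary_percent * 100 else default_backend


if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(10_000)]
    routed = [assign_backend(k, "gaudi-pool", "gpu-pool", canary_percent=10) for k in keys]
    share = routed.count("gaudi-pool") / len(routed)
    print(f"canary share ~ {share:.1%}")  # close to 10%, identical on every run
```

Because routing depends only on the key, raising the percentage later keeps every already-routed key on the canary and only adds new ones, which keeps before/after comparisons clean.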

📐 Comparison Notes (and Where to Read Next)

This article is a duel-focused look at Intel vs NVIDIA. For the broader market dynamics—including AMD and Apple—see The AI Chip Wars. If you’re contemplating on-device strategy, jump to On-Device AI Race: Apple, Qualcomm, Intel, NVIDIA—Who’s Winning What? If your priority is creator/gamer hardware, our NVIDIA RTX 5090 Review goes deeper on graphics.

🧩 Buyer’s Quick-Screen Checklist

- Confirm framework parity: can you run your current PyTorch/TensorFlow code with minimal changes?
- Measure cost per 1M tokens (LLM) or per 1k images (CV) under your latency SLOs.
- Validate vendor availability and lead times before committing roadmaps.
- Lock in observability (latency, throughput, error rates, utilization) before scaling any new silicon.
- Decide train vs serve split: it’s common to use different vendors for each.
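The cost-per-1M-tokens line item reduces to simple arithmetic once you have sustained throughput measured while meeting your latency SLO (not peak throughput with latency ignored). A minimal sketch; the hourly price and throughput are placeholders, not quotes or benchmarks for any vendor.

```python
def cost_per_million_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """USD per 1M generated tokens for an instance billed by the hour.

    tokens_per_second should be the sustained rate measured while meeting the SLO.
    """
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Placeholder numbers: a $10/hour instance sustaining 2,500 tokens/s within SLO.
    print(f"${cost_per_million_tokens(10.0, 2_500):.2f} per 1M tokens")
```

For the CV line, the same arithmetic applies with images per second in place of tokens and a 1,000 multiplier instead of 1,000,000.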

💡 Nerd Tip: Your “best chip” is often the one that lets your team ship and learn fastest, not the one with the shiniest FLOP figure.

🔮 Future Outlook: Collaboration or Fragmentation?

Three themes define the next 12–18 months:

- Standardization will creep in at the inference layer: ONNX, OpenVINO, and server abstractions narrow the gap for portable serving.
- Fragmentation persists at the frontier. New attention mechanisms, longer contexts, and sparsity tricks reward ecosystems with the richest kernel support; NVIDIA benefits here.
- Enterprises normalize hybrid. Expect RFPs to require multi-vendor support for resilience and negotiating power. Training, fine-tune, and inference may each sit on the best-fit silicon.

For a news pulse on adjacent players (Apple’s vertical on-device strategy, AMD’s server push), bookmark Apple’s Next Big Move in AI Chips and AMD Ryzen 9000 Series.

🧠 Nerd Verdict

This isn’t just a market share scuffle—it’s a clash of philosophies. NVIDIA optimizes for frontier velocity with a GPU-first, software-heavy ecosystem that makes new research practical fast. Intel optimizes for per-request economics and ubiquity, pairing Gaudi accelerators for predictable serving costs with NPUs that make AI feel native on every laptop. In 2025, the smartest organizations aren’t “team green” or “team blue”—they are hybrid by design, using each where it drives the most business value.

💬 Would You Bite?

If you had to choose a single direction today, would you pay a premium for CUDA velocity or optimize TCO with Gaudi for standardized inference?

And how much of your roadmap could shift to on-device NPUs without hurting user experience? 👇

Crafted by NerdChips for creators and teams who want their best ideas to travel the world.