🌍 Why 2025 Marks a Turning Point for AI Regulation

Artificial intelligence has moved from niche research to global infrastructure. In 2025, AI is no longer a futuristic concept—it powers customer service, automates financial trading, and even shapes hiring decisions. But with this ubiquity comes risk: bias in algorithms, misuse of generative models, and safety concerns in high-stakes sectors.

Governments worldwide now agree: AI cannot remain the Wild West. Regulation is arriving fast. The challenge is ensuring that laws strike a balance—fostering innovation while protecting society. For businesses, this is not just about compliance; it’s about strategy. For users, it’s about trust.

This is why 2025 feels like a policy inflection point. Regulation now belongs alongside the emerging AI trends to watch in 2025, because it is itself a trend that shapes the trajectory of the technology.

📢 The Push for AI Regulation

The urgency for AI rules stems from rapid adoption. Chatbots built on GPT models, AI-powered trading systems, and autonomous decision-making tools are already embedded in daily life. Without oversight, risks escalate: deepfakes spread misinformation, and biased recruitment software silently excludes candidates.

Public pressure has forced lawmakers to act. Just as AI on Wall Street faces scrutiny for its role in financial markets, governments want frameworks that ensure accountability in every sector. Regulators are no longer asking "if" but "how soon" AI laws should apply.

🇪🇺 Beyond the EU AI Act

The EU AI Act is the world’s first comprehensive AI law and sets the tone for global regulation. It categorizes AI into four risk levels: unacceptable, high, limited, and minimal. Systems that manipulate behavior, enable mass surveillance, or endanger safety may be banned outright. High-risk systems, such as those used in healthcare or law enforcement, face strict oversight.

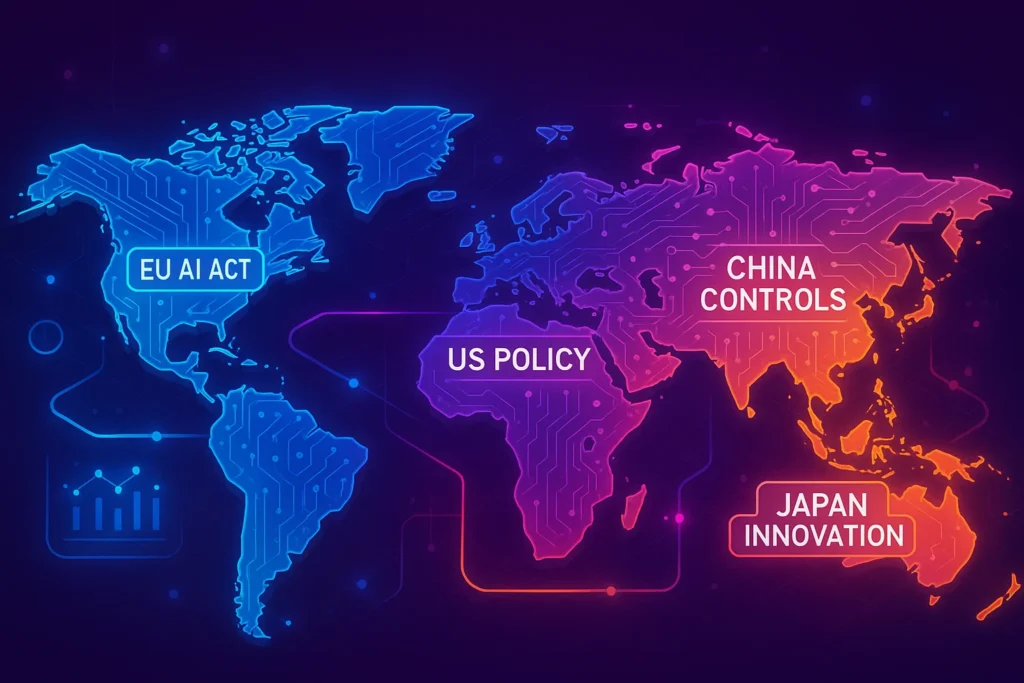

While Europe leads with sweeping rules, other regions are charting their own course:

- United States: Lawmakers debate sector-specific regulation rather than one overarching law. Expect guidelines around transparency, liability, and ethical AI in government contracts.
- China: Regulation focuses heavily on state control, ensuring AI aligns with national priorities. Rules require companies to align generative AI with "core socialist values" while supporting industrial competitiveness.
- Japan: Taking a more innovation-friendly approach, Japan emphasizes industry self-regulation combined with government standards. The aim is to remain a hub for global AI development.
- Other Regions: Countries like Canada, India, and Brazil are drafting frameworks, often blending EU-style risk classification with local priorities.

These variations mean businesses operating globally must navigate a patchwork of rules.

💼 Impact on Businesses

For companies, AI regulation represents both cost and opportunity. Compliance requires audits, documentation, and explainability in algorithms. Startups may find this burdensome, but transparency can also become a selling point. Customers and investors increasingly favor businesses that build AI responsibly.

Industries that lean heavily on automation (marketing, finance, logistics) will need to rethink workflows. Automation is already reshaping jobs, as The Future of Work highlights; regulation may slow reckless adoption, but it also pushes firms to implement AI more strategically.

One overlooked benefit is risk reduction. Clear rules reduce legal uncertainty, making it easier for businesses to invest in AI without fear of sudden bans. Regulations also level the playing field: responsible companies no longer compete against those cutting corners.

👥 What It Means for Users

For end users, AI regulation promises trust and safety. Imagine applying for a loan and knowing the algorithm must meet transparency standards. Or using a generative AI platform where misuse filters are enforced by law, not just company policy.

Users gain stronger protections against harmful content, discriminatory systems, and misuse of personal data. In the EU, for instance, the GDPR already lets people contest solely automated decisions that significantly affect them, and the AI Act adds a right to an explanation when certain high-risk systems contribute to such decisions.

At the same time, regulation may limit access to experimental features. Some tools could become geo-restricted as companies launch first in regions with lighter oversight. Users may face slower rollouts but safer experiences. This tension between innovation and control mirrors the balance explored in OpenAI's new GPT features explained simply, where excitement about new capabilities must coexist with responsible guardrails.

⚖️ Regional Comparison

| Region | Approach | Focus | Impact |
|---|---|---|---|
| EU | Comprehensive law (AI Act) | Risk-based classification, strict oversight | High compliance costs but strong consumer trust |
| US | Sector-specific, fragmented | Transparency, liability, innovation | Slower, fragmented rulemaking; patchwork compliance |
| China | State-directed | Alignment with national goals, content control | Innovation within constraints, heavy monitoring |
| Japan | Industry-led with guidance | Flexibility, innovation-first | Business-friendly, softer guardrails |
| Other (Canada, India, Brazil) | Hybrid approaches | Local adaptation of EU-style rules | Growing alignment with global norms |

This comparison shows no single “global AI law” exists. Instead, companies and users must adapt to regional differences, just like they do with data privacy (GDPR vs. U.S. models).

🔮 Looking Ahead

The coming years will define how AI integrates into society. Regulations may feel restrictive in the short term, but long term, they can establish a foundation for sustainable innovation. Businesses that proactively align with ethical frameworks will not only avoid penalties but also build trust with users.

AI laws are likely to evolve, just as technology does. What is high-risk today may be standard tomorrow. The key for both companies and individuals is agility: staying informed and adapting quickly.

In essence, regulation is not the end of AI's rise; it is the beginning of its maturity. Forecasts of AI regulation on the rise have proven right, and 2025 confirms that governance is now part of the innovation cycle.

🌍 Global Case Studies

The abstract idea of "AI laws" becomes real when you see how industries are already adapting. In the United States, major banks face pressure to audit their lending algorithms. Bias in credit approvals is no longer only a reputational risk but a regulatory one: federal fair-lending rules require lenders to give applicants specific reasons when credit is denied, even when the decision comes from a complex model.

In Europe, AI used for medical imaging generally falls into the EU AI Act's high-risk category, which brings obligations such as risk management, documentation, and human oversight. A radiology system that misclassifies a tumor is no longer just a technical bug; if those safeguards were missing, it becomes a compliance failure. These obligations force healthcare providers to work closely with both regulators and technology vendors.

In China, AI companies rolling out generative models must comply with strict rules about “acceptable content.” Models need built-in filters aligned with government priorities, shaping everything from chatbots to creative tools.

These examples show that regulation is no longer theory—it’s reshaping industries in real time.

💼 Business Strategy Framework

How can companies prepare for this new regulatory environment? A three-part framework helps:

- Governance: Establish internal AI ethics boards to review high-risk applications before deployment.
- Compliance Tools: Use monitoring software to track algorithmic bias, data quality, and transparency metrics.
- Risk Management: Develop crisis protocols. If an AI system fails, companies should be ready to explain decisions, recall systems, or compensate affected users.
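The "Compliance Tools" step is the most technical part of this framework. As a minimal sketch of what a bias monitor might compute, the snippet below compares approval rates across groups using a disparate impact ratio and flags any group that falls below the "four-fifths" threshold that US employment-selection guidelines use as a rule of thumb for adverse impact. The function names, group labels, sample data, and the 0.8 threshold are illustrative assumptions, not a legal standard for every sector; real audits use legally defined protected classes, larger samples, and statistical tests.

```python
from collections import defaultdict

# Assumed threshold: the "four-fifths rule" from US employment-selection
# guidelines, used here only as an illustrative rule of thumb.
FOUR_FIFTHS = 0.8


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact(decisions, threshold=FOUR_FIFTHS):
    """Compare each group's approval rate with the highest-rate group.

    Returns a dict mapping group -> (ratio, flagged), where flagged is True
    when the ratio falls below the threshold.
    """
    rates = approval_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # No approvals at all: ratios are undefined, so report parity
        # and flag nothing.
        return {g: (1.0, False) for g in rates}
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}


if __name__ == "__main__":
    # Made-up sample decisions for two hypothetical applicant groups.
    sample = (
        [("group_a", True)] * 60 + [("group_a", False)] * 40
        + [("group_b", True)] * 42 + [("group_b", False)] * 58
    )
    for group, (ratio, flagged) in sorted(disparate_impact(sample).items()):
        status = "REVIEW" if flagged else "ok"
        print(f"{group}: ratio={ratio:.2f} {status}")
```

Running the script prints a ratio of 1.00 for group_a and 0.70 for group_b, so group_b would be flagged for review. A flag like this is a prompt for human investigation, not proof of discrimination, which is why the framework pairs monitoring tools with a governance board.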

By embedding these strategies, businesses transform compliance from a burden into a competitive advantage. Transparency can become a trust-building signal for customers and investors alike.

⚖️ Innovation vs Regulation Debate

The heart of the global conversation is whether strict regulation will stifle innovation. European startups worry they’ll fall behind Silicon Valley if compliance costs grow too high. Yet some analysts argue the opposite: clear rules create stability, making investors more willing to fund AI ventures in Europe.

The U.S. benefits from a looser approach, allowing rapid experimentation, but risks public backlash if harms emerge unchecked. China’s model enforces state alignment, which limits free creativity but accelerates industrial AI adoption.

This debate has no single winner—it reveals a trade-off. Regulation sets boundaries, but boundaries can also focus innovation. The companies that thrive will be those that integrate compliance into their core design process, rather than treating it as an afterthought.

🌐 Ethical & Social Impact Angle

AI regulation is not only about business or efficiency. It touches deeper ethical concerns. Laws aim to protect democratic processes from deepfakes, shield citizens from discriminatory algorithms, and prevent militarization of AI in ways that destabilize geopolitics.

For everyday users, this means the rules aren’t just corporate checklists—they are guardrails for fairness, dignity, and social stability. Without regulation, the same tools that generate creative art or automate workflows could just as easily manipulate elections or reinforce systemic inequality.

By embedding ethics into law, societies make a statement: AI must serve people, not the other way around.

🗓️ Mini Timeline

| Year | Milestone | Impact |
|---|---|---|
| 2021 | European Commission proposes the AI Act | First global attempt at comprehensive AI law |
| 2023 | EU lawmakers reach political agreement on the AI Act; China's generative AI rules take effect | Binding AI rules move from proposal to reality |
| 2024 | EU AI Act adopted and enters into force | Clear risk categories defined |
| 2025 | EU bans on unacceptable-risk AI apply (February); obligations for general-purpose AI models begin (August) | Signals global enforcement era |
| 2026+ | US, China, Japan refine regional frameworks | Patchwork of global AI governance takes shape |

This timeline shows regulation is not a single event but an unfolding story.

🧾 User Preparedness Checklist

AI regulation doesn’t only apply to businesses—users also need to adapt. Here’s how everyday people can prepare:

- Know Your Rights: In the EU, you can contest solely automated decisions that significantly affect your life and request human review, and the AI Act adds a right to an explanation for decisions involving certain high-risk systems.
- Check Transparency: Prefer services that disclose when AI is used, especially in hiring, lending, or healthcare.
- Protect Your Data: Be cautious with platforms collecting personal information. Regulation will improve safeguards, but users should remain vigilant.
- Stay Informed: As laws evolve, understand how they affect tools you rely on, from workplace apps to financial platforms.

This checklist empowers users to be active participants in the AI era, not passive subjects.

🧠 Nerd Verdict

AI regulation is inevitable—and necessary. The debate is no longer about whether laws should exist, but how balanced they will be. For businesses, this is the moment to shift from reactive compliance to proactive strategy. For users, it’s a chance to gain transparency and accountability in systems that increasingly shape their lives.

Verdict: regulation is not a threat to AI, but a catalyst for its responsible growth. The winners will be those who embrace governance as part of innovation, not as its enemy.

💬 Would You Bite?

Do you think AI regulation will build trust in the technology—or create red tape that stifles innovation? How should lawmakers strike the balance?