🧭 Intro: Why Diagnosis Is the Battleground for AI

Medicine stands or falls on accurate diagnosis. Treatment plans, costs, and outcomes cascade from what we decide a patient has—and how early we catch it. In 2025, diagnostics is the sharp edge of AI in healthcare. Models now read chest X-rays at scale, highlight suspicious lesions in mammograms, score stroke risk from CT angiography, flag dermatologic malignancies from phone photos, and sift pathology whole-slide images (WSI) with billions of pixels. Hospitals report faster triage; rural telehealth programs catch conditions earlier; radiology groups use AI as the first pass, not the last resort.

But the same systems can encode bias, overfit to hospital “tells,” and generate confident errors that are hard to spot under time pressure. Liability remains murky, privacy stakes are high, and poorly governed rollouts can erode trust. This guide narrows entirely to diagnostics—where AI reads, ranks, flags, and predicts—not generic “health tech.” If you want the wider lens after, pair this with AI in Healthcare: Breakthroughs and Challenges and AI in Healthcare: Tech That’s Saving Lives. For long-term context, bookmark AI & Future Tech Predictions for the Next Decade and for the governance layer see AI Ethics & Policy and AI-Powered Cybersecurity.

💡 Nerd Tip: In diagnostics, seconds and specificity are currencies. Design your AI program to buy both—or don’t buy at all.

🩻 Breakthroughs in AI Diagnostics: Where It Works Right Now

AI’s most mature beachhead is medical imaging. Radiology models triage and prioritize studies, annotate suspected findings, and sometimes generate preliminary impressions. Large health systems report that AI triage for chest X-rays, CT stroke protocols, and lung cancer screening reduces time-to-read by 20–40% for urgent studies, with sensitivity gains typically 5–12 percentage points when AI is used as an assist rather than a replacement. Across the market, the majority of regulatory clearances for AI/ML medical devices sit in radiology (roughly 75% of 700+ AI/ML-enabled devices), a signal of both maturity and clinical need.

Pathology is the next big leap. Whole-slide image (WSI) analysis uses vision transformers to scan gigapixel slides for tumor regions, margins, mitotic figures, and molecular proxies. Early adopters use AI to pre-screen negatives, highlight likely positives, and measure tumor burden consistently across sites. In labs where AI pre-screens common negatives, pathologists reclaim 10–25% of time for complex cases without compromising quality.

Dermatology AI moved from consumer novelty to structured triage. High-quality, clinician-overseen capture pipelines (clinic kiosks or guided smartphone capture) cut false positives seen in “in-the-wild” apps, and risk-stratified outputs now guide who needs dermoscopy vs. biopsy. Gains are strongest for rule-out workflows where missing nothing is paramount.

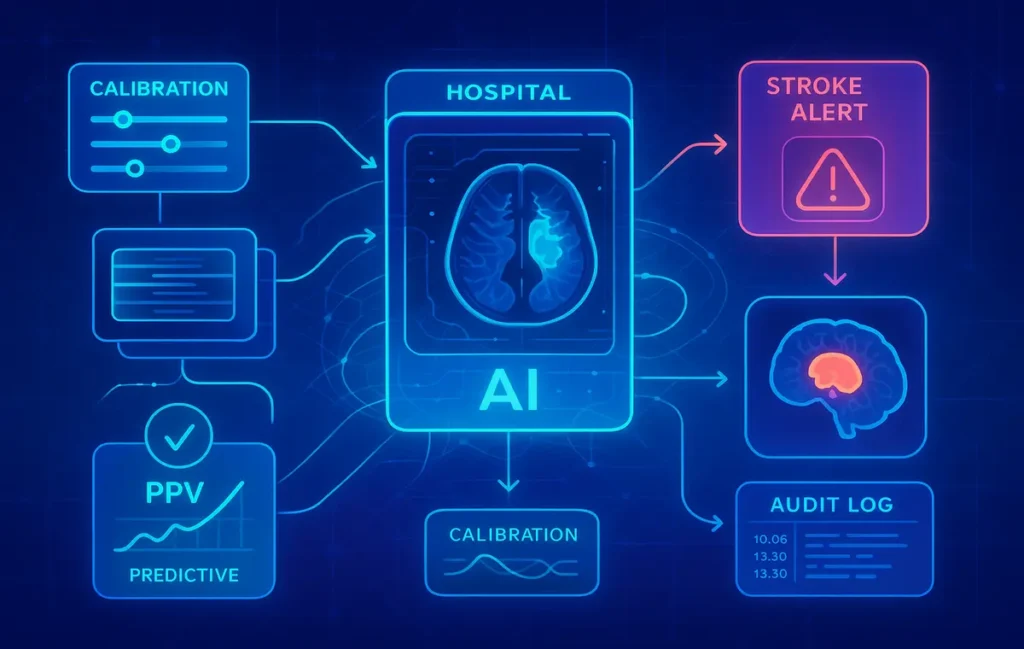

Finally, predictive diagnostics integrate labs, vitals, and longitudinal history to flag early risk of heart failure decompensation, sepsis, or diabetic complications. The best programs don’t announce diagnoses; they surface probabilities and actionable next steps (order this test, schedule follow-up, repeat imaging). Hospitals using well-calibrated early-warning models report 7–15 minutes faster stroke door-to-needle intervals and 5–10% lower unnecessary admissions in pilot units—small numbers that add up across thousands of patients.

💡 Nerd Tip: In diagnostic AI, calibration beats raw AUC. If a predicted 20% risk means that ~20% of those patients actually have the disease, clinicians can plan. Poorly calibrated models erode trust, even with high AUC.
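To make the calibration point concrete, here is a minimal sketch of a reliability check on a local validation set. It assumes binary labels and predicted probabilities in [0, 1]; the function names, the five-bin default, and the idea of summarizing with expected calibration error (ECE) are illustrative choices, not any vendor's API.

```python
from typing import List, Sequence, Tuple


def reliability_table(
    probs: Sequence[float], labels: Sequence[int], n_bins: int = 5
) -> List[Tuple[float, float, int]]:
    """Return (mean predicted risk, observed disease rate, count) per non-empty bin."""
    bins: List[List[Tuple[float, int]]] = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, y))
    rows = []
    for members in bins:
        if members:
            mean_p = sum(p for p, _ in members) / len(members)
            observed = sum(y for _, y in members) / len(members)
            rows.append((mean_p, observed, len(members)))
    return rows


def expected_calibration_error(
    probs: Sequence[float], labels: Sequence[int], n_bins: int = 5
) -> float:
    """Weighted average gap between predicted risk and observed rate."""
    rows = reliability_table(probs, labels, n_bins)
    total = sum(n for _, _, n in rows)
    if total == 0:
        raise ValueError("no predictions to evaluate")
    return sum(n / total * abs(mean_p - observed) for mean_p, observed, n in rows)
```

An ECE near zero means predicted risks track observed rates. The per-bin table is often more useful than the single number, because it shows where the model over- or under-estimates risk.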

🧪 Real-World Success Stories: From Pilots to Practice

Emergency radiology triage. When AI flags suspected large-vessel occlusion on CT angiography, the case jumps to the top of the worklist, a stroke alert fires, and neuro-interventional teams mobilize sooner. Observational data from large emergency departments show 30–50% faster radiology turn-around for flagged studies and measurable reductions in treatment delay. Even when the AI is wrong, the escalation pathway often surfaces other urgent findings (e.g., hemorrhage) faster.
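The reordering step can be sketched as a priority queue. This is a toy model under stated assumptions: the `Worklist` class and its two-level ranking (flagged first, then arrival order) are hypothetical, and a real PACS/RIS integration would carry many more priority rules.

```python
import heapq
import itertools
from typing import List, Tuple


class Worklist:
    """Reading queue: AI-flagged studies first, then first-come, first-served."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._arrival = itertools.count()  # monotonically increasing tie-breaker

    def add(self, study_id: str, ai_flagged: bool) -> None:
        rank = 0 if ai_flagged else 1  # lower rank is read sooner
        heapq.heappush(self._heap, (rank, next(self._arrival), study_id))

    def next_study(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

Because unflagged studies keep their arrival order, a false flag costs one reordering rather than a lost study, which is part of why triage is a forgiving first deployment.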

Lung cancer screening at scale. Programs combining low-dose CT with AI nodule detection reduce overlooked sub-centimeter nodules and standardize follow-up using Lung-RADS criteria. Sites report fewer “lost to follow-up” cases because AI-driven reminders and integrated decision support keep patients on protocol.

Telederm and rural triage. Clinics with limited dermatology access use guided capture + AI risk scoring to decide who needs specialist review. Wait times drop from weeks to days; the dermatologist’s queue is filled with higher-risk cases. A common pattern: AI weeds out obvious benign lesions (with systematic safety nets), while clinicians focus on edge cases.

Digital pathology quality control. Before a pathologist opens a case, an AI pass checks for poor scan focus, tissue folds, and label mismatches. This “boring” use case prevents re-work and avoids downstream diagnostic errors unrelated to the model’s medical judgment.

What’s consistent across the wins? Not “AI as doctor,” but AI as workflow accelerator: reorder, highlight, standardize, and document.

💡 Nerd Tip: The fastest returns often hide in non-glamorous automations—worklist prioritization, quality checks, structured measurements. Chase those before moonshots.

⚠️ Risks & Challenges: Where Things Break

Dataset bias is the most cited—and most misunderstood—risk. Models trained primarily on data from one geography, device vendor, or demographic can generalize poorly elsewhere. Classic example: chest X-ray models learning shortcuts like “portable” markers or hospital-specific tokens that correlate with disease in training but don’t cause it. In deployment, performance drops or flips in subgroups (e.g., skin tone for dermatology, age bands in pediatrics vs. adults). The fix isn’t just “more data”—it’s representative data, site diversity, and subgroup evaluation reported transparently.
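Subgroup evaluation is simple to compute once predictions are tagged with a subgroup. The sketch below assumes binary labels and a single subgroup field per record; the record layout and function name are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple


def _ratio(num: int, den: int) -> Optional[float]:
    """Return num/den, or None when the subgroup has no cases of that kind."""
    return num / den if den else None


def subgroup_metrics(
    records: Iterable[Tuple[str, int, int]]
) -> Dict[str, Dict[str, Optional[float]]]:
    """records are (subgroup, true_label, predicted_label) with 0/1 labels."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, y_true, y_pred in records:
        if y_pred == 1:
            key = "tp" if y_true == 1 else "fp"
        else:
            key = "fn" if y_true == 1 else "tn"
        counts[group][key] += 1
    return {
        group: {
            "n": sum(c.values()),
            "sensitivity": _ratio(c["tp"], c["tp"] + c["fn"]),
            "specificity": _ratio(c["tn"], c["tn"] + c["fp"]),
        }
        for group, c in counts.items()
    }
```

Reporting `n` next to each metric matters: a subgroup with a handful of cases can show a perfect or terrible score purely by chance.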

Misdiagnosis & liability. When AI suggests “no acute finding” and a clinician concurs, who’s responsible if there was one? Courts and regulators are still catching up, but the safest stance today is retained clinical accountability with documented AI assistance. That means audit logs showing what the model displayed, when, to whom, and what was done next. Policies must define “AI-assisted” vs. “AI-overridden” decisions, especially when AI outputs feed into automated alerts.
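One way to picture such an audit log is a single structured record per displayed output. The field names and the two decision labels below are hypothetical; they simply mirror the "what, when, to whom, what next" list above.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

# Hypothetical labels matching the "AI-assisted" vs. "AI-overridden" distinction.
ALLOWED_DECISIONS = {"ai_assisted", "ai_overridden"}


def utc_now_iso() -> str:
    """Timestamp helper: current time in UTC, ISO 8601 format."""
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class AuditEvent:
    model_id: str
    model_version: str
    study_id: str
    displayed_output: str  # what the model showed
    shown_to: str          # who saw it
    shown_at: str          # when, as an ISO 8601 UTC string
    decision: str          # one of ALLOWED_DECISIONS
    next_action: str       # what was done next

    def __post_init__(self) -> None:
        if self.decision not in ALLOWED_DECISIONS:
            raise ValueError(f"unknown decision: {self.decision!r}")


def to_log_line(event: AuditEvent) -> str:
    """Serialize one event as a JSON line so logs stay queryable."""
    return json.dumps(asdict(event), sort_keys=True)
```

Writing one JSON object per line keeps the log easy to load into whatever query tool the quality team already uses.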

Over-reliance on automation. Even great models can nudge users into automation bias: trusting the suggestion over their own judgment. Countermeasures include confidence displays, saliency maps (with training on how to interpret them), and “holdout” days where the model is silently switched off so you can verify that unaided human performance has not degraded.

Privacy & security. Diagnostic AI systems ingest the most sensitive data there is. Risks include re-identification from images, leakage via third-party annotation, and vendor breaches. Encryption at rest and in transit is table stakes. Stronger programs add data minimization, federated learning (where feasible), and zero-trust vendor access. Coordinate with your security team on adversarial risks too; manipulated DICOM headers or pixel-level attacks can mislead a model or mask pathology. (For the full defense layer, see AI-Powered Cybersecurity.)

Drift & domain shift. New scanner firmware, a different contrast protocol, or a population change can silently degrade performance. Without ongoing monitoring—calibration checks, prior-shift detection, periodic revalidation—your “state-of-the-art” model becomes yesterday’s news.
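A common lightweight drift check compares the distribution of model scores this week against a baseline window. The sketch below uses the Population Stability Index (PSI), which is a swapped-in technique chosen for illustration and not something the programs above are known to use. It assumes scores in [0, 1]; the bin count and smoothing constant are arbitrary.

```python
import math
from typing import List, Sequence


def _bin_fractions(scores: Sequence[float], n_bins: int, eps: float = 1e-6) -> List[float]:
    """Fraction of scores in each equal-width bin, floored at eps to avoid log(0)."""
    if not scores:
        raise ValueError("need at least one score")
    counts = [0] * n_bins
    for s in scores:
        counts[min(int(s * n_bins), n_bins - 1)] += 1
    return [max(c / len(scores), eps) for c in counts]


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], n_bins: int = 10
) -> float:
    """PSI = sum over bins of (current - baseline) * ln(current / baseline)."""
    expected = _bin_fractions(baseline, n_bins)
    actual = _bin_fractions(current, n_bins)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A PSI of zero means the score distribution has not moved. By common rule of thumb, values above roughly 0.25 are treated as a significant shift worth revalidating, though the right alert threshold is a local decision.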

On X, clinicians put it bluntly:

“AI is great at catching what I’d catch on a good day. I need it to help me on bad days, weird cases, and 2 a.m. scans.” — ER radiologist

“Skin AI is amazing for triage but struggles with atypicals. The workflows matter more than the heatmaps.” — Dermatology NP

💡 Nerd Tip: Never deploy without a kill-switch and a fallback protocol. Safety isn’t pessimism—it’s good engineering.
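A kill-switch can be as simple as a guard around the model call. In this minimal sketch the class name, the `predict` callable, and the 0.5 threshold are all hypothetical; the fallback here is "read in normal order with no AI flag", which is an assumption about the local protocol.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class TriageResult:
    priority: str  # "urgent" or "routine"
    source: str    # "model" or "fallback"


class GuardedTriage:
    """Wraps a model so it can be switched off or fail without blocking work."""

    def __init__(self, predict: Callable[[dict], float], threshold: float = 0.5) -> None:
        self._predict = predict
        self._threshold = threshold
        self.enabled = True  # the kill-switch

    def disable(self) -> None:
        self.enabled = False

    def triage(self, study: dict) -> TriageResult:
        if self.enabled:
            try:
                score = self._predict(study)
                priority = "urgent" if score >= self._threshold else "routine"
                return TriageResult(priority, "model")
            except Exception:
                pass  # a real system would log and alert here before falling back
        # Fallback protocol: standard worklist order, no AI flag.
        return TriageResult("routine", "fallback")
```

Tagging every result with its `source` lets downstream dashboards show how often the fallback path is actually being used.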

🧭 Regulation & Ethics: Guardrails That Actually Help

Regulators moved from curiosity to cadence. In the US, the FDA’s Software as a Medical Device (SaMD) pathways, including Predetermined Change Control Plans (for learning updates), give vendors a way to ship improvements without full re-clearance—provided they prove safety. The EU MDR leans harder on post-market surveillance and risk classification. Both environments increasingly expect subgroup reporting, not just an average AUC.

Clinically, explainability isn’t a pretty saliency map; it’s decision support you can interrogate. The most useful explanations show what changed the recommendation, link to relevant prior images or labs, and display confidence intervals. Ethical frameworks now stress role clarity (AI as assist, not authority), informed consent where appropriate, and appeal pathways (what happens when a patient or clinician disagrees with the model).

Accountability must be more than a PDF policy. In mature programs you’ll see:

- A named clinical owner for each model (usually a specialty lead).
- A Model Facts label available to end-users: scope, training data characteristics, known failure modes, performance by subgroup, last validation date.
- Audit trails that are queryable, not just stored.
- Incident response playbooks for model errors with patient safety implications.
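The Model Facts label from the list above maps naturally onto a small data structure. This sketch is hypothetical throughout: the field names follow the list, and the 365-day staleness window is an assumed policy, not a regulatory requirement.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass
class ModelFacts:
    name: str
    scope: str
    training_data: str
    known_failure_modes: List[str]
    subgroup_performance: Dict[str, Dict[str, float]]
    last_validation: date

    def is_stale(self, today: date, max_age_days: int = 365) -> bool:
        """True when the last validation is older than the allowed window."""
        return (today - self.last_validation).days > max_age_days

    def render(self) -> str:
        """Plain-text label suitable for showing to end-users."""
        lines = [
            f"Model Facts: {self.name}",
            f"Scope: {self.scope}",
            f"Training data: {self.training_data}",
            "Known failure modes: " + "; ".join(self.known_failure_modes),
            f"Last validation: {self.last_validation.isoformat()}",
        ]
        for group, metrics in self.subgroup_performance.items():
            stats = ", ".join(f"{k}={v:.2f}" for k, v in metrics.items())
            lines.append(f"Subgroup {group}: {stats}")
        return "\n".join(lines)
```

Keeping the label as structured data rather than a PDF makes it easy to surface in the viewer and to alert the clinical owner when validation goes stale.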

Want to go deeper on governance? Cross-reference your plan with AI Ethics & Policy for a policy-level checklist you can adapt.

💡 Nerd Tip: Write the Model Facts before you install the model. If you can’t explain it to clinicians, you aren’t ready to deploy.

🧬 The Future: Multimodal Co-Pilots in the Clinical Loop

The “single-modality” era is ending. Next-gen diagnostic AI will integrate images + text + signals—think CT + EHR notes + labs + genomics—to produce case-level suggestions, not just image-level flags. That matters because diagnosis lives in context: a subtle opacity in a high-risk smoker means something different than the same opacity in a young, otherwise healthy patient. Multimodal co-pilots can weight those differences, propose differential diagnoses, and rank next tests with expected utility.
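To see how context can change the ranking of next tests, consider a toy expected-utility calculation. Everything here is an assumption for illustration: the formula (benefit from true positives, minus harm from false positives, minus cost), the unitless utility scale, and the two made-up tests.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CandidateTest:
    name: str
    sensitivity: float
    specificity: float
    cost: float  # same arbitrary utility units as benefit and harm


def expected_utility(
    test: CandidateTest, p_disease: float, benefit: float, harm: float
) -> float:
    """Toy model: gain from true positives, loss from false positives, minus cost."""
    gain = p_disease * test.sensitivity * benefit
    loss = (1 - p_disease) * (1 - test.specificity) * harm
    return gain - loss - test.cost


def rank_tests(
    tests: List[CandidateTest], p_disease: float, benefit: float = 10.0, harm: float = 2.0
) -> List[CandidateTest]:
    """Order candidate tests from highest to lowest expected utility."""
    return sorted(
        tests, key=lambda t: expected_utility(t, p_disease, benefit, harm), reverse=True
    )
```

With a sensitive but costly test and a cheaper, more specific one, the ranking can flip as the pre-test probability rises, which is the same effect as the high-risk smoker versus the young, healthy patient.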

In practice, AI will shift from detection to decision choreography: automatically gathering priors, anchoring comparisons, suggesting structured impression text, and teeing up orders or consults—always with human sign-off. Expect agentic workflows that handle tedious follow-through: scheduling the follow-up CT, generating patient-friendly summaries, or pushing reminders into the patient portal. (If you’re designing these flows, see Agentic AI Workflows and AI Agents vs. Traditional Workflows for architecture patterns.)

We’ll also see training and validation evolve. Synthetic data will help fill rare edge cases; federated networks will pool insights across hospitals without exchanging raw data; and continual learning will adapt models to local quirks—again, under strict change-control. The endgame is not AI replacing specialists but raising the floor of quality and expanding access to high-standard diagnostics globally.

💡 Nerd Tip: Your competitive advantage isn’t the model—it’s the data flywheel and workflow. Systems that learn responsibly from their own outcomes will keep getting better.

🩺 Ready to Ship Diagnostic AI—Safely?

Grab our Clinical AI Safety Pack: a Model Facts template, subgroup validation worksheet, and a go-live checklist tailored for radiology and pathology teams.

🧰 Diagnostic AI Landscape at a Glance (Comparison Table)

| Domain | Typical AI Task | Maturity (2025) | Strength in Practice | Common Failure Mode | Best-Fit Deployment |
|---|---|---|---|---|---|
| Radiology (CT/MRI/X-ray) | Triage, detection, measurement, report assist | High | Worklist speed, consistent measurements, fewer misses on fatigue | Shortcut learning, vendor/device shift | Integrated with PACS/RIS + clear escalation rules |
| Pathology (WSI) | Tumor detection, grading, QC | Medium-High | Negative pre-screen, standardized grading, QC of scans | Slide artifacts, staining variability | Lab pre-screen + pathologist confirmation |
| Dermatology | Risk stratification from images | Medium | Access expansion, good rule-out triage | Poor capture, skin tone bias | Guided capture + clinician review for positives |
| Cardiology | Arrhythmia/echo analysis, early HF risk | Medium | Early alerts, structured measurements | Over-alerting, poor calibration | Alerts with tiered actions + human review |
| Predictive Diagnostics | Early warning from EHR multimodal data | Medium | Earlier detection of deterioration | Drift, confounding | Unit-specific calibration + ongoing monitoring |

🛠️ Deployment Checklist (One Pass Before Go-Live)

- Clinical owner, safety officer, and IT owner named; kill-switch defined.
- Site-specific validation shows calibration and subgroup performance.
- Model Facts label published; users trained on limits and fallbacks.
- Audit logging + access control configured; privacy impact assessment done.
- Monitoring dashboard (alerts, PPV, drift) live; incident playbook rehearsed.

💡 Nerd Tip: Track three numbers weekly: % flagged, PPV of flags, time-to-action. If they stall or drift, investigate before clinicians disengage.
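Those three numbers can come out of one small function. The sketch assumes each study record carries a flag, an adjudicated outcome when available, and minutes from flag to first clinical action; the `Study` fields and the choice of median are assumptions.

```python
from statistics import median
from typing import Dict, NamedTuple, Optional, Sequence


class Study(NamedTuple):
    flagged: bool
    confirmed: Optional[bool]           # None until a clinician adjudicates the flag
    minutes_to_action: Optional[float]  # None if no action has been recorded


def weekly_summary(studies: Sequence[Study]) -> Dict[str, Optional[float]]:
    """Compute % flagged, PPV of flags, and median time-to-action for one week."""
    flagged = [s for s in studies if s.flagged]
    adjudicated = [s for s in flagged if s.confirmed is not None]
    times = [s.minutes_to_action for s in flagged if s.minutes_to_action is not None]
    return {
        "pct_flagged": 100 * len(flagged) / len(studies) if studies else None,
        "ppv": sum(s.confirmed for s in adjudicated) / len(adjudicated) if adjudicated else None,
        "median_minutes_to_action": median(times) if times else None,
    }
```

PPV is computed only over adjudicated flags, so a backlog of unreviewed flags shows up as a shrinking denominator rather than a silently wrong number.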

🧵 Operational Design: How to Make AI Fit Real Clinical Work

Most failures aren’t model failures—they’re workflow failures. Put the output where attention already lives: directly in PACS with a visual overlay and structured short text, not a separate portal. Keep the number of clicks to confirm or override near zero. If the model flags many false positives, don’t expect clinicians to learn to love it; tune thresholds, or use AI earlier (e.g., reorder studies) rather than later (e.g., demand justification).
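Threshold tuning can be framed as a small search: keep sensitivity above a floor, then pick the threshold that gives the best PPV. This is one possible framing, not a standard recipe; the function name and the 0.9 default floor are assumptions, and the search should run on local validation data.

```python
from typing import Optional, Sequence, Tuple


def choose_threshold(
    scores: Sequence[float], labels: Sequence[int], min_sensitivity: float = 0.9
) -> Optional[Tuple[float, float, float]]:
    """Return (threshold, ppv, sensitivity) with the best PPV above the sensitivity floor.

    A study is flagged when its score is >= threshold. Returns None if no
    candidate threshold meets the floor.
    """
    positives = sum(labels)
    if positives == 0:
        raise ValueError("need at least one positive case to tune a threshold")
    best: Optional[Tuple[float, float, float]] = None
    for t in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        sensitivity = tp / positives
        if sensitivity < min_sensitivity:
            continue
        ppv = tp / (tp + fp)  # tp + fp >= 1 because t is one of the scores
        if best is None or ppv > best[1]:
            best = (t, ppv, sensitivity)
    return best
```

Raising the floor trades fewer misses for more false alarms, so the floor itself is a clinical decision that belongs to the model's named owner.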

Focus on handoffs. A flagged stroke case must automatically notify the right on-call team; a suspicious lung nodule should pre-populate a follow-up plan compliant with guidelines. Build documentation by default—every AI-assisted action leaves a trace so quality teams can audit speed and accuracy without searching inboxes.

Lastly, invest in feedback loops. Make it easy for clinicians to tag model errors. Use those tags for active learning (within your change-control plan), update the Model Facts, and celebrate fixes. When clinicians see their feedback shape the tool, adoption spikes.

💡 Nerd Tip: Start with one high-value pathway, not a dozen: e.g., CT stroke triage in ED or WSI pre-screen in breast pathology. Nail it, measure it, then expand.

🧠 Nerd Verdict

AI diagnostics is a co-pilot revolution, not a replacement coup. The biggest wins come when organizations combine fit-for-purpose models with fit-for-people workflows, measure what matters (calibration, PPV, time-to-action), and maintain relentless governance. Do that and you’ll see earlier detection, faster triage, and more consistent care—without sacrificing safety. That’s the ethos we champion at NerdChips: ship technology that helps clinicians decide faster and better, not just differently.

💬 Would You Bite?

If you could green-light one deployment tomorrow, would you start with radiology triage (CT stroke, chest X-ray) for speed or pathology pre-screen for throughput?

Tell us your setting; we’ll suggest the safest first step. 👇

Crafted by NerdChips for clinicians, builders, and teams who want AI to save minutes—and lives—without losing trust.