Intro:

YouTube’s latest Made on YouTube announcements weren’t “someday” demos—they’re shippable, useful, and surprisingly creator-centric. If you publish on YouTube, you’re getting new AI in three lanes that actually matter: creation (faster drafting and stylizing), distribution (brand deals and shopping that don’t break the viewer experience), and safety (clear labels, watermarks, and better rights tools). This guide turns each update into a concrete workflow you can run this week.

We’ll cover the headline features—Veo 3 Fast in Shorts, Edit with AI, Speech to Song, flexible brand integrations, direct links for Shorts, expanded Shopping, smarter live, and Studio’s new AI helpers (Ask Studio, Inspiration)—and show where they slot into a modern content system. You’ll also see how the new SynthID watermarking and Likeness detection give you more control over what’s done with your face, voice, and IP. Along the way, we’ll flag smart internal reads you can keep nearby, like YouTube SEO: How to Rank Your Videos and YouTube Analytics Explained for measurement and momentum.

💡 Nerd Tip: Treat these launches like Lego bricks. You don’t need everything at once—assemble a tiny, repeatable stack and compound small wins.

🎥 Veo 3 Fast Arrives in Shorts: From Prompt to Clip, With Sound

Veo 3 Fast is being integrated natively into YouTube Shorts as a free, prompt-to-video generator. The big unlock is speed and proximity: you can sketch an idea directly in the app, then output short, stylized video with accompanying audio—without bouncing across five tools. Beyond plain “text to video,” YouTube’s Veo upgrades let you animate photos, apply style transfers to your footage, and add objects or characters into a scene using text descriptions. For creators who shoot on phones, this is a creative multiplier: you can draft scenes, visualize b-roll alternatives, or punch up a cold open before you ever sit down to edit.

Practically, think of Veo 3 Fast as a pre-viz buddy and hook machine. Use it to prototype intro beats and transitions, test background plates that fit your brand aesthetic, and fill visual gaps in storytelling where you don’t have time to capture new footage. Since you can now conjure “establishing shots” or motion backgrounds on demand, your Shorts stop relying on stock clips that everyone else uses.

Rollout is staged—United States, UK, Canada, Australia, and New Zealand first—so teams outside those markets should plan to piggyback on collaborators or set up a lightweight VPN workflow for drafting (publish from your usual region). All AI-generated segments include SynthID watermarking and content labels. That’s not a nuisance—it’s a trust signal for viewers and a future-proofing move for brand deals.

💡 Nerd Tip: Script hooks with visual verbs (“zoom,” “morph,” “burst,” “split”) you can literally depict via Veo prompts. Write the shot, then render it.

✂️ Edit with AI: Drafting, Rearranging, and Smart Sound in One Pass

“Edit with AI” takes messy raw files and gives you a first draft. It can reorder “moments” (beats with semantic meaning), suggest trims, and stitch a preview that points you to what’s working. It also helps you lay in music and a first-pass voiceover so pacing makes sense before you polish. For most channels, 60–70% of edit time goes to finding the story, not finessing it. Offloading the rough cut frees you to do the human parts: comedic timing, narrative beats, and teaching clarity.

Here’s a practical rhythm: dump your A-roll, mark “keepers” at natural breaths, and let Edit with AI propose the backbone. Accept the spine, then layer your own B-roll and on-screen text. Use the AI’s suggested moment groups as your chapter candidates for long-form and your micro-beats for Shorts. It’s not about “AI doing the edit.” It’s about never starting from an empty timeline again.

Want deeper post-production reading when you move from rough to real? Keep Best AI Editing Tools for YouTube Creators open for caption automation, denoising, and AI-driven cut detection that pair well with YouTube’s native tools.

💡 Nerd Tip: Rename the AI’s “moments” to your teachable beats. The names you give clips often become your chapter titles and community timestamps.

🎵 Speech to Song: Turn Dialogue Into Catchy Hooks for Shorts

YouTube’s “Speech to Song” is delightfully weird—and extremely practical. It takes spoken dialogue and transforms it into a musicalized version fit for Shorts. Think of it as an auto-remix engine that can turn your how-to tip into a sticky chorus or your punchline into a rhythmic drop. For channels that struggle with sound design, this is a cheat code for pattern interruption—the tiny jolt that stops the scroll.

We’ve seen watch-through jump when creators insert a 3–6 second musical “punch” right after the hook line, then return to teaching. Used sparingly, Speech to Song gives you that punch without hiring a composer. And because it works on your actual words, it stays on-brand.

💡 Nerd Tip: Record key lines dry at a steady cadence. Clean inputs produce musical outputs that require less cleanup and hit the beat reliably.

🧱 Quick Comparison: What Each New AI Tool Does Best

| Tool | Core Use | Best For | Creator Win |
|---|---|---|---|
| Veo 3 Fast (Shorts) | Prompt → video + audio; animate photos; add objects; style transfer | Hooks, motion backgrounds, visual gags | Fast pre-viz, unique visuals, fewer stock clips |
| Edit with AI | Rough-cut assembly; rearrange moments; draft music/VO | First pass on long-form; finding story beats | Cuts rough-cut time; better chaptering |
| Speech to Song | Turns spoken lines into musical hooks | Pattern interrupts in Shorts | Memorable beats without custom scoring |
| Ask Studio (Studio) | AI chatbot with personalized channel stats & advice | “What should I make next?” | Decisions from your own data |
| Inspiration Tab (Studio) | New content ideas based on audience behavior | Filling your calendar | On-trend topics with context |
| Likeness Detection | Remove AI videos using your face (YPP members) | Brand safety & identity control | Faster takedowns & trust |
| SynthID Watermark | AI content labeling across outputs | Transparency with audience & brands | Future-proof compliance |

💡 Nerd Tip: Assign each tool a job. Veo = visual hook. Edit with AI = rough cut. Speech to Song = pattern break. Ask Studio = topic choice. That’s your minimal viable stack.

🤝 Brand Collabs, Reimagined: Flexible Sponsorships and Live Shopping That Don’t Break Story

YouTube’s new flexible sponsorship format for long videos matters more than its name suggests. Creators can swap out the sponsorship segment post-publish, or include alternative ad slots to sell into different regions and brands without re-uploading the entire piece. In business terms, your video becomes a living asset: one great tutorial can host a privacy-friendly, audience-appropriate midroll that evolves as your partnerships do.

For Shorts, YouTube is enabling direct links to brand sites so creators can run clear, trackable calls-to-action without clumsy workarounds. Meanwhile, YouTube Shopping is expanding to new markets (including Brazil) with marquee brands joining—and YouTube is rolling out AI-assisted auto-product tagging to identify and label items referenced in videos. Combined, this means your video can mention a product, display it contextually, and route viewers to buy, all within YouTube’s rails.

If you plan to monetize more assertively this year, this is your moment to architect a humane, viewer-first funnel. Anchor with content that genuinely helps, use flexible sponsorship slots to keep messaging fresh, and let Shopping links do the heavy lifting for viewers who want to act now. For macro strategy, skim Video Marketing Trends so your packaging matches how attention behaves this quarter.

💡 Nerd Tip: Write two ad reads per sponsorship: a “universal evergreen” and a “regional remix.” Slot the right one per market using the flexible format to preserve tone and relevance.

⚡ Ready to Build Smarter YouTube Workflows?

Pair YouTube’s new AI with battle-tested creator tools—Descript/CapCut for speed, Resolve or Premiere for polish, TubeBuddy/vidIQ for metadata. Make one system, not a tool pile.

🔴 Better Live: Practice Mode, Playables on Live, and Dual-Format Streaming

Live just became friendlier. Practice mode lets you rehearse a stream privately with all the same scenes, audio chains, and chat overlays before you go public. For creators who fear technical gremlins, this alone raises the ceiling on live quality.

Playables on Live adds a gaming-native twist: you can run playable segments during the stream. Think interactive demos, mini-games between sections, or community challenges. Expect smarter Q&A formats and “play-to-win” sponsor moments that feel organic, not bolted on.

Finally, YouTube is supporting horizontal and vertical simulcast—the same live session in 16:9 and 9:16. If your audience splits across TVs and phones, you no longer have to choose the lesser evil. This also means your post-live repurposing gets easier: the vertical recording is already there for Shorts.

💡 Nerd Tip: Write your run-of-show with a 30-second “clip seam” every 3–5 minutes. Your future editor will thank you when cutting post-live Shorts.
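
To make the clip-seam tip concrete, here is a minimal Python sketch that lays out seam windows for a run-of-show. The function names (`clip_seams`, `fmt`), the 4-minute default interval, and the 20-minute example stream are illustrative assumptions, not anything YouTube provides; adjust them to your own format.

```python
def clip_seams(stream_minutes, interval_minutes=4, seam_seconds=30):
    """Return (start, end) offsets in seconds for each planned clip seam.

    Assumption: one seam begins every `interval_minutes`, and a seam is
    only scheduled if it fits entirely inside the stream.
    """
    seams = []
    step = interval_minutes * 60
    total = stream_minutes * 60
    start = step
    while start + seam_seconds <= total:
        seams.append((start, start + seam_seconds))
        start += step
    return seams


def fmt(seconds):
    """Format a second offset as MM:SS for a run-of-show sheet."""
    return f"{seconds // 60:02d}:{seconds % 60:02d}"


if __name__ == "__main__":
    # Hypothetical 20-minute stream with the default 4-minute spacing.
    for begin, end in clip_seams(stream_minutes=20):
        print(f"seam {fmt(begin)} to {fmt(end)}")
```

Paste the printed windows into your run-of-show and plan a clean pause or recap line at each one, so every seam doubles as a natural cut point for post-live Shorts.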

🧠 Studio Upgrades: Ask Studio and the New Inspiration Tab

Inside YouTube Studio, two AI assists are rolling out:

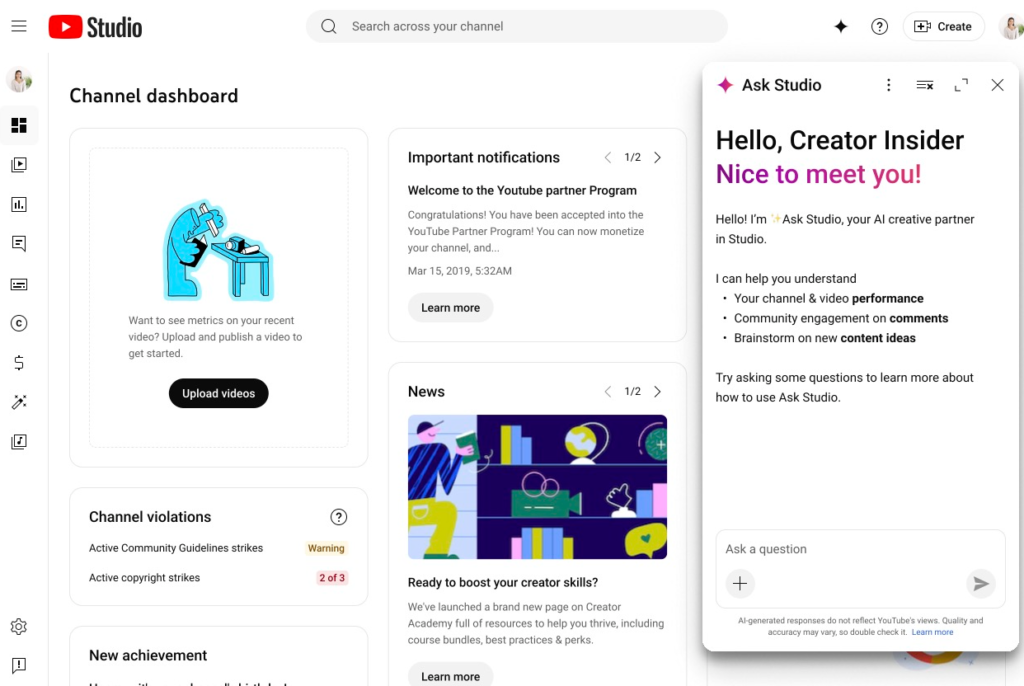

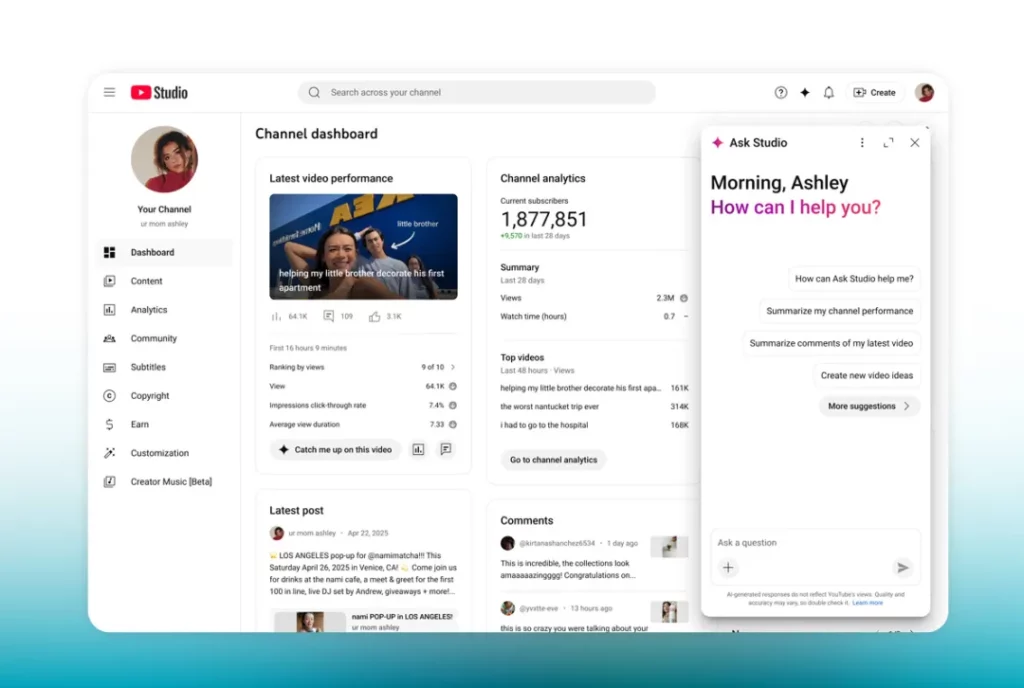

Ask Studio is a chatbot trained on your channel data. Instead of guessing what to post, you can ask: “Which topics saw the best 30-day view velocity?” or “Where do viewers drop off in my last tutorial?” The key is specificity—use it like you’d use an analyst, not a fortune teller. Ask for comparatives (“How did Shorts with on-screen text perform vs without?”) and actionables (“Give me three title frames that match the best-performing structure”).

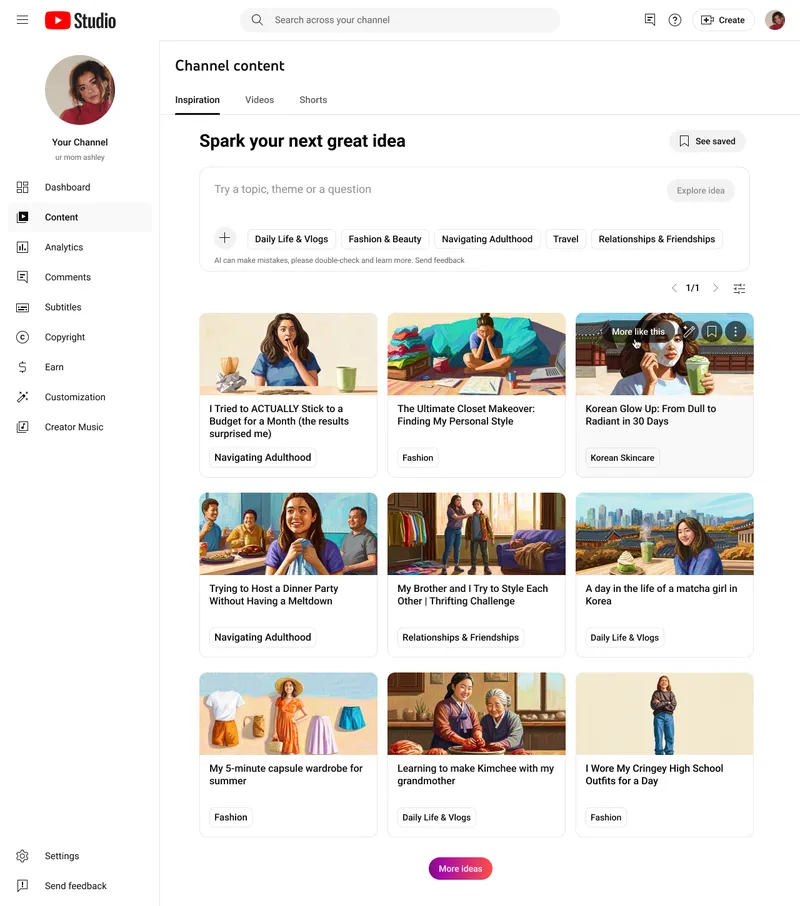

Inspiration is a dedicated tab that proposes content ideas based on audience behavior—what they watch, search, and rewind. It doesn’t just dump trends; it provides context so your ideas feel on brand. Use it to fill blind spots in your calendar, especially for series that need fresh angles every week.

Pair these with your fundamentals: titles and metadata from YouTube SEO: How to Rank Your Videos and performance read-offs using YouTube Analytics Explained. AI-assisted ideation works best when pointed at a clear strategy.

💡 Nerd Tip: Build a weekly Studio ritual. 20 minutes with Ask Studio, 10 minutes shortlisting Inspiration ideas, then lock your next 3 hooks.

🛡️ Safety, Rights, and Transparency: SynthID & Likeness Detection

YouTube is baking trust into the workflow with SynthID AI watermarks and content labels for generated media. That gives viewers and brands a clear signal about what they’re seeing, and it helps creators differentiate stylized segments from documentary footage without awkward disclaimers. Watermarks also pre-empt future policy whiplash. If regulations tighten, labeled content is less likely to get caught in messy retrofits.

On the rights side, Likeness detection for YouTube Partner Program members lets you request takedowns of AI-generated videos that use your face, or a close imitation of it, without consent. That’s huge. It won’t eliminate misuse overnight, but it gives you a first-party remedy on the platform where your audience actually lives.

💡 Nerd Tip: Add a one-liner in your video descriptions: “AI-generated segments are labeled on-screen.” It’s subtle friction that builds big trust over time.

🛠️ One-Hour Setup Checklist (Print and Stick to Your Monitor)

- Turn on Practice Mode in your live profile, then run a 5-minute private rehearsal with your full scene stack.
- In Shorts, draft three 10–20 second Veo 3 Fast hooks for your next video; keep them in a “hooks” bin.
- In Studio, ask: “Top 3 audience-retained topics last 90 days?” Then queue one idea via Inspiration.
- Write two sponsorship reads (evergreen + regional), save as templates, and tag them in your CMS.
- Create a 10-line “Speech to Song” bank (short lines you’ll likely reuse) and record them clean.

🧭 A Creator’s Playbook: Put the New Tools Into a Real Workflow

Here’s a lean, repeatable loop that blends the new tools with what you already do:

- Outline the hero video (8–15 minutes) with a clean arc and 4–6 chapter seams.
- Prototype your opener with Veo 3 Fast: generate 2–3 visual variants for the same hook line and pick the one that reads at a glance on mobile.
- Record and ingest, then run Edit with AI to get a rough spine and a suggested music bed. This isn’t your finish line; it’s a direction finder.
- Punch up teaching beats and layer human timing. Insert one Speech to Song moment where the tip hits hardest, 3–6 seconds and no longer.
- Ask Studio for a title pattern that matches your channel’s best 30-day view velocity. Draft titles/descriptions, then revise by hand.
- Export clips seeded by your chapter seams and post three Shorts across the week. For one of them, insert an approved direct brand link if relevant to the lesson.
- If the long-form is sponsorship-eligible, upload the evergreen and regional ad slots via the flexible format.
- In your next live, flip Practice Mode on. After the stream, slice three verticals from the built-in 9:16 recording.
- Check Inspiration for the next angle, then log one insight in your content calendar: which hook line hit, which fell flat, and what you’ll test next.

If you’re optimizing beyond YouTube, this loop fits a broader strategy easily. Use your favorite editor’s AI-enabled features from Best AI Editing Tools for YouTube Creators to move even faster, and map it onto a consistent cadence with YouTube Analytics Explained as your weekly truth-teller.

💡 Nerd Tip: Hooks aren’t just words—they’re pictures. Write them like shot lists, then let Veo visualize before you commit.

📈 Monetization & Measurement: What to Track When Everything Is New

New toys don’t change old truths. Anchor your measurement in three time horizons:

First 24 hours: Hook quality (3-second hold on Shorts, initial CTR on long-form). If a Veo-driven hook underperforms, swap the opening frame and subtitle within the first 2–6 hours. Fast packaging tweaks often rescue good content.

First 7 days: Completion on Shorts (aim for ~60–80% on sub-30s clips), average view duration on long-form (optimize chapter pacing), and end-screen CTR (signal strength of your next step). If your Speech to Song moment spikes replays, note its placement and length.

First 30 days: Sponsorship performance (watch time across the ad segment), Shopping link CTR and conversion where enabled, and topic-level retention curves. Ask Studio for comparisons: “Shorts with AI visuals vs. traditional b-roll: any difference in retention?” Export the deltas to your content calendar.

When you’re ready to scale the calendar behind your experiments, tie this measurement rhythm to YouTube Analytics Explained for a weekly, repeatable review.
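
If you keep your review notes in a script or notebook, the three horizons above can be sketched as a small lookup. This is a rough Python illustration under stated assumptions: the names (`HORIZONS`, `metrics_to_review`, `shorts_completion_status`) are hypothetical, the hour cutoffs are simply 24 hours, 7 days, and 30 days, and you enter the numbers yourself from your analytics. It does not call any YouTube API.

```python
# Horizons and metrics mirror the three time windows described above.
# Cutoffs are those windows expressed in hours (assumption: inclusive bounds).
HORIZONS = [
    (24, "first 24 hours",
     ["3-second hold (Shorts)", "initial CTR (long-form)"]),
    (24 * 7, "first 7 days",
     ["Shorts completion", "average view duration", "end-screen CTR"]),
    (24 * 30, "first 30 days",
     ["sponsorship watch time", "Shopping link CTR", "topic-level retention"]),
]


def metrics_to_review(hours_since_publish):
    """Return (horizon label, metrics) for a video of the given age."""
    for limit, label, metrics in HORIZONS:
        if hours_since_publish <= limit:
            return label, metrics
    return "past 30 days", []


def shorts_completion_status(completion_rate, length_seconds):
    """Compare a Short's completion rate with the ~60-80% aim for sub-30s clips.

    `completion_rate` is a fraction between 0 and 1 that you type in by hand.
    """
    if length_seconds >= 30:
        return "no target given"  # the guide only states an aim for sub-30s clips
    if completion_rate < 0.60:
        return "below target"
    if completion_rate <= 0.80:
        return "in target range"
    return "above target"


if __name__ == "__main__":
    label, metrics = metrics_to_review(hours_since_publish=36)
    print(label, metrics)
    print(shorts_completion_status(completion_rate=0.65, length_seconds=20))
```

The point of the lookup is discipline rather than automation: at each check-in you look only at the metrics that belong to that window.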

💡 Nerd Tip: Label every video with three tags in your notes: “Hook type,” “AI feature used,” “CTA type.” Patterns show up fast when you tag consistently.
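
As a minimal sketch of how consistent tagging surfaces patterns, the Python below groups a notes log by one tag and averages a metric per tag value. Everything here is an assumption for illustration: the field names (`hook_type`, `ai_feature`, `cta_type`, `retention`), the function `average_by_tag`, and all the sample numbers are made up, not real channel data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical notes log: one row per video with the three tags from the tip,
# plus a retention fraction copied by hand from analytics. Values are invented.
VIDEOS = [
    {"hook_type": "question", "ai_feature": "Veo 3 Fast",
     "cta_type": "shopping link", "retention": 0.62},
    {"hook_type": "question", "ai_feature": "Speech to Song",
     "cta_type": "end screen", "retention": 0.58},
    {"hook_type": "visual morph", "ai_feature": "Veo 3 Fast",
     "cta_type": "direct link", "retention": 0.71},
    {"hook_type": "visual morph", "ai_feature": "Edit with AI",
     "cta_type": "end screen", "retention": 0.69},
]


def average_by_tag(videos, tag, metric="retention"):
    """Average `metric` for each distinct value of `tag`."""
    groups = defaultdict(list)
    for video in videos:
        groups[video[tag]].append(video[metric])
    return {value: round(mean(scores), 3) for value, scores in groups.items()}


if __name__ == "__main__":
    for tag in ("hook_type", "ai_feature", "cta_type"):
        print(tag, average_by_tag(VIDEOS, tag))
```

With only a handful of videos per tag the averages are noisy, so treat them as prompts for your next test rather than conclusions.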

🧪 Real-World Mini Scenarios (So You Can See It in Action)

Education Channel: A math creator drafts a visual cold open in Veo 3 Fast: a chalkboard morphs into a 3D grid while the hook line appears. Edit with AI assembles a draft, the creator trims for clarity, and inserts a 4-second Speech to Song beat at the sublesson turn. They sell a course via flexible sponsorships, rotating the read when they feature different modules.

Productivity Channel: A workflow coach asks Ask Studio which video structures have the strongest 30-day velocity. Inspiration suggests “automation templates” are trending, so they film a tutorial and use Veo for animated UI backgrounds in Shorts. In the live Q&A, Playables on Live runs a “choose your own automation” game that informs the next video’s outline.

Gaming Channel: A streamer flips on Practice Mode, tests their audio chain, then simulcasts landscape and vertical. The vertical recording feeds three Shorts. Later, Likeness detection helps remove a fake clip using their face pitching a scam.

If you’re also repurposing beyond YouTube, peek at Best AI Writing Assistants for YouTube Scripts for scripting speed, then slot those learnings into a system powered by YouTube SEO: How to Rank Your Videos so each upload stacks on the last.

💡 Nerd Tip: Build your AI use policy and pin it for your community: what you’ll generate, what you’ll never fake, and how you label it. Clarity ≫ controversy.

🧠 Nerd Verdict

YouTube’s new AI isn’t about replacing your craft—it’s about removing the drag between ideas and finished videos. Veo 3 Fast accelerates visual iteration where you used to stall. Edit with AI takes the heavy lift out of rough cuts so you can spend energy on story and pacing. Speech to Song gives you pattern interrupts that help Shorts breathe. Studio upgrades sharpen decisions using your data, and the business-side improvements (flexible sponsorships, direct links, Shopping expansion) make each upload a better asset. Safety features (SynthID, Likeness detection) signal a platform that wants AI to be usable and accountable.

If you adopt even two of these in a tight loop and review results weekly, you’ll feel the compounding effect within a month. That’s the NerdChips way: systems over sprints, clarity over chaos.

💬 Would You Bite?

If you could automate one part of your YouTube workflow this week, what would make the biggest difference—hooks, rough cuts, or analytics insight?

Tell us your choice and we’ll share a 3-step game plan tailored to it in a future NerdChips post.

Crafted by NerdChips for creators who want AI to amplify craft—not replace it.